Public Member Functions | |

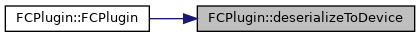

| FCPlugin (const nvinfer1::Weights *weights, int nbWeights, int nbOutputChannels) | |

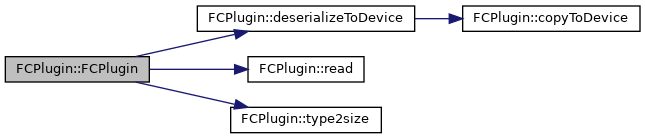

| FCPlugin (const void *data, size_t length) | |

| ~FCPlugin () | |

| int | getNbOutputs () const override |

| Get the number of outputs from the layer. More... | |

| nvinfer1::Dims | getOutputDimensions (int index, const nvinfer1::Dims *inputs, int nbInputDims) override |

| bool | supportsFormat (nvinfer1::DataType type, nvinfer1::PluginFormat format) const override |

| Check format support. More... | |

| void | configureWithFormat (const nvinfer1::Dims *inputDims, int nbInputs, const nvinfer1::Dims *outputDims, int nbOutputs, nvinfer1::DataType type, nvinfer1::PluginFormat format, int maxBatchSize) override |

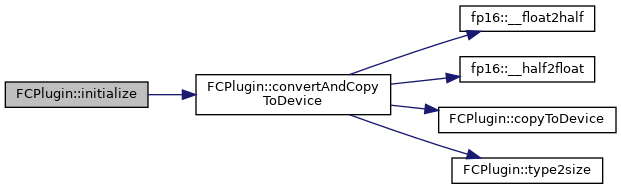

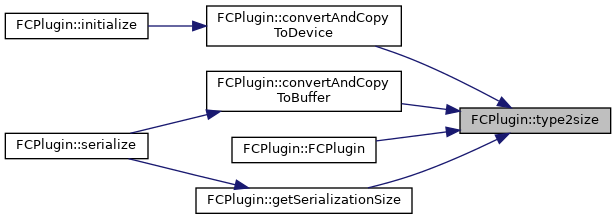

| int | initialize () override |

| Initialize the layer for execution. More... | |

| virtual void | terminate () override |

| Release resources acquired during plugin layer initialization. More... | |

| virtual size_t | getWorkspaceSize (int maxBatchSize) const override |

| virtual int | enqueue (int batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream) override |

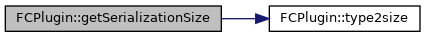

| virtual size_t | getSerializationSize () override |

| Find the size of the serialization buffer required. More... | |

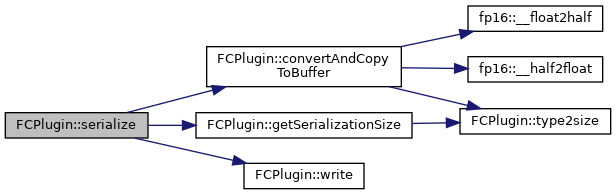

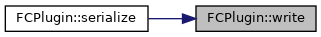

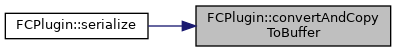

| virtual void | serialize (void *buffer) override |

| Serialize the layer. More... | |

| virtual int32_t | getTensorRTVersion () const |

| Return the API version with which this plugin was built. More... | |

| virtual void | configureWithFormat (const Dims *inputDims, int32_t nbInputs, const Dims *outputDims, int32_t nbOutputs, DataType type, PluginFormat format, int32_t maxBatchSize)=0 |

| Configure the layer. More... | |

| virtual Dims | getOutputDimensions (int32_t index, const Dims *inputs, int32_t nbInputDims)=0 |

| Get the dimension of an output tensor. More... | |

| virtual size_t | getWorkspaceSize (int32_t maxBatchSize) const =0 |

| Find the workspace size required by the layer. More... | |

| virtual int32_t | enqueue (int32_t batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream)=0 |

| Execute the layer. More... | |

Protected Member Functions | |

| void | configure (const Dims *, int32_t, const Dims *, int32_t, int32_t) |

| Derived classes should not implement this. More... | |

Private Member Functions | |

| size_t | type2size (nvinfer1::DataType type) |

| template<typename T > | |

| void | write (char *&buffer, const T &val) |

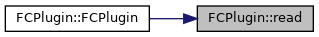

| template<typename T > | |

| void | read (const char *&buffer, T &val) |

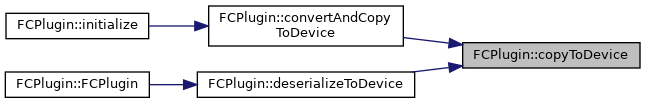

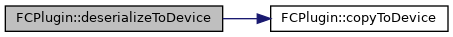

| void * | copyToDevice (const void *data, size_t count) |

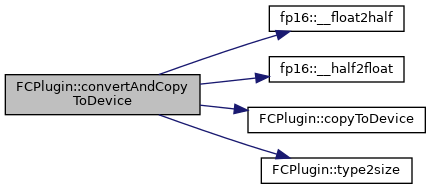

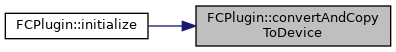

| void | convertAndCopyToDevice (void *&deviceWeights, const nvinfer1::Weights &weights) |

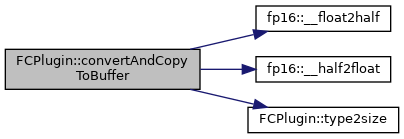

| void | convertAndCopyToBuffer (char *&buffer, const nvinfer1::Weights &weights) |

| void | deserializeToDevice (const char *&hostBuffer, void *&deviceWeights, size_t size) |

Private Attributes | |

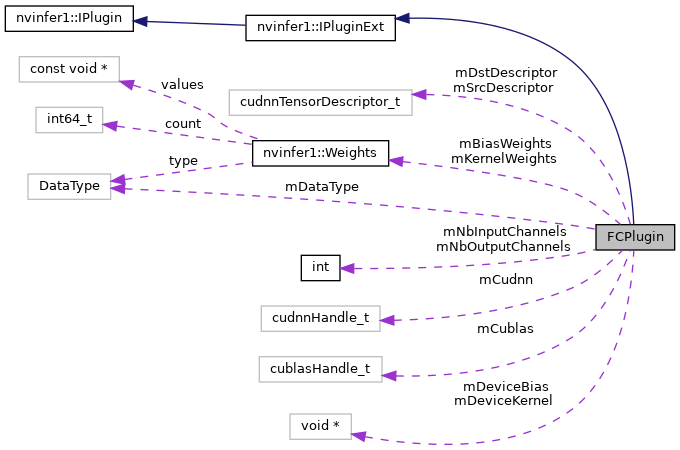

| int | mNbOutputChannels |

| int | mNbInputChannels |

| nvinfer1::Weights | mKernelWeights |

| nvinfer1::Weights | mBiasWeights |

| nvinfer1::DataType | mDataType {nvinfer1::DataType::kFLOAT} |

| void * | mDeviceKernel {nullptr} |

| void * | mDeviceBias {nullptr} |

| cudnnHandle_t | mCudnn |

| cublasHandle_t | mCublas |

| cudnnTensorDescriptor_t | mSrcDescriptor |

| cudnnTensorDescriptor_t | mDstDescriptor |

|

inline |

|

inline |

|

inline |

|

inlineoverridevirtual |

Get the number of outputs from the layer.

This function is called by the implementations of INetworkDefinition and IBuilder. In particular, it is called prior to any call to initialize().

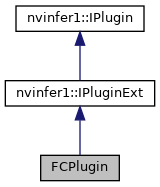

Implements nvinfer1::IPlugin.

|

inlineoverride |

|

inlineoverridevirtual |

Check format support.

| type | DataType requested. |

| format | PluginFormat requested. |

This function is called by the implementations of INetworkDefinition, IBuilder, and ICudaEngine. In particular, it is called when creating an engine and when deserializing an engine.

Implements nvinfer1::IPluginExt.

|

inlineoverride |

|

inlineoverridevirtual |

Initialize the layer for execution.

This is called when the engine is created.

Implements nvinfer1::IPlugin.

|

inlineoverridevirtual |

Release resources acquired during plugin layer initialization.

This is called when the engine is destroyed.

Implements nvinfer1::IPlugin.

|

inlineoverridevirtual |

|

inlineoverridevirtual |

|

inlineoverridevirtual |

Find the size of the serialization buffer required.

Implements nvinfer1::IPlugin.

|

inlineoverridevirtual |

Serialize the layer.

| buffer | A pointer to a buffer of size at least that returned by getSerializationSize(). |

Implements nvinfer1::IPlugin.

|

inlineprivate |

|

inlineprivate |

|

inlineprivate |

|

inlineprivate |

|

inlineprivate |

|

inlineprivate |

|

inlineprivate |

|

inlinevirtualinherited |

Return the API version with which this plugin was built.

Do not override this method as it is used by the TensorRT library to maintain backwards-compatibility with plugins.

|

pure virtualinherited |

Configure the layer.

This function is called by the builder prior to initialize(). It provides an opportunity for the layer to make algorithm choices on the basis of its weights, dimensions, and maximum batch size.

| inputDims | The input tensor dimensions. |

| nbInputs | The number of inputs. |

| outputDims | The output tensor dimensions. |

| nbOutputs | The number of outputs. |

| type | The data type selected for the engine. |

| format | The format selected for the engine. |

| maxBatchSize | The maximum batch size. |

The dimensions passed here do not include the outermost batch size (i.e. for 2-D image networks, they will be 3-dimensional CHW dimensions).

|

inlineprotectedvirtualinherited |

Derived classes should not implement this.

In a C++11 API it would be override final.

Implements nvinfer1::IPlugin.

|

pure virtualinherited |

Get the dimension of an output tensor.

| index | The index of the output tensor. |

| inputs | The input tensors. |

| nbInputDims | The number of input tensors. |

This function is called by the implementations of INetworkDefinition and IBuilder. In particular, it is called prior to any call to initialize().

|

pure virtualinherited |

Find the workspace size required by the layer.

This function is called during engine startup, after initialize(). The workspace size returned should be sufficient for any batch size up to the maximum.

|

pure virtualinherited |

Execute the layer.

| batchSize | The number of inputs in the batch. |

| inputs | The memory for the input tensors. |

| outputs | The memory for the output tensors. |

| workspace | Workspace for execution. |

| stream | The stream in which to execute the kernels. |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |

|

private |