Public Types

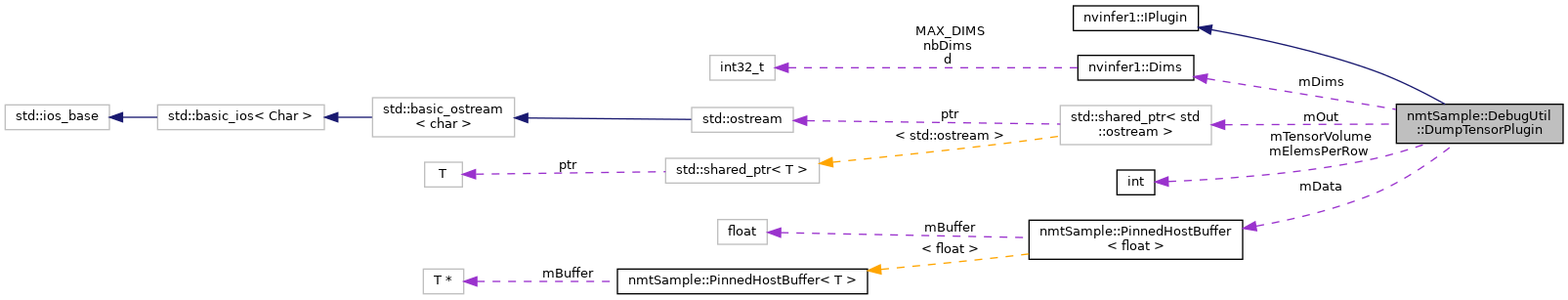

| typedef std::shared_ptr< DumpTensorPlugin > | ptr |

Public Member Functions

| DumpTensorPlugin (std::shared_ptr< std::ostream > out) | |

| ~DumpTensorPlugin () override=default | |

| int | getNbOutputs () const override |

| Get the number of outputs from the layer. More... | |

| nvinfer1::Dims | getOutputDimensions (int index, const nvinfer1::Dims *inputs, int nbInputDims) override |

| void | configure (const nvinfer1::Dims *inputDims, int nbInputs, const nvinfer1::Dims *outputDims, int nbOutputs, int maxBatchSize) override |

| int | initialize () override |

| Initialize the layer for execution. More... | |

| void | terminate () override |

| Release resources acquired during plugin layer initialization. More... | |

| size_t | getWorkspaceSize (int maxBatchSize) const override |

| int | enqueue (int batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream) override |

| size_t | getSerializationSize () override |

| Find the size of the serialization buffer required. More... | |

| void | serialize (void *buffer) override |

| Serialize the layer. More... | |

Public Member Functions inherited from nvinfer1::IPlugin

| virtual Dims | getOutputDimensions (int32_t index, const Dims *inputs, int32_t nbInputDims)=0 |

| Get the dimension of an output tensor. More... | |

| virtual void | configure (const Dims *inputDims, int32_t nbInputs, const Dims *outputDims, int32_t nbOutputs, int32_t maxBatchSize)=0 |

| Configure the layer. More... | |

| virtual size_t | getWorkspaceSize (int32_t maxBatchSize) const =0 |

| Find the workspace size required by the layer. More... | |

| virtual int32_t | enqueue (int32_t batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream)=0 |

| Execute the layer. More... | |

Private Attributes

| std::shared_ptr< std::ostream > | mOut |

| nvinfer1::Dims | mDims |

| int | mTensorVolume |

| int | mElemsPerRow |

| PinnedHostBuffer< float >::ptr | mData |

| typedef std::shared_ptr<DumpTensorPlugin> nmtSample::DebugUtil::DumpTensorPlugin::ptr |

| nmtSample::DebugUtil::DumpTensorPlugin::DumpTensorPlugin (std::shared_ptr< std::ostream > out) |

| nmtSample::DebugUtil::DumpTensorPlugin::~DumpTensorPlugin () | override, default |

| int nmtSample::DebugUtil::DumpTensorPlugin::getNbOutputs () const | override, virtual |

Get the number of outputs from the layer.

This function is called by the implementations of INetworkDefinition and IBuilder. In particular, it is called prior to any call to initialize().

Implements nvinfer1::IPlugin.

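| nvinfer1::Dims nmtSample::DebugUtil::DumpTensorPlugin::getOutputDimensions (int index, const nvinfer1::Dims *inputs, int nbInputDims) | override |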

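| void nmtSample::DebugUtil::DumpTensorPlugin::configure (const nvinfer1::Dims *inputDims, int nbInputs, const nvinfer1::Dims *outputDims, int nbOutputs, int maxBatchSize) | override |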

| int nmtSample::DebugUtil::DumpTensorPlugin::initialize () | override, virtual |

Initialize the layer for execution.

This is called when the engine is created.

Implements nvinfer1::IPlugin.

| void nmtSample::DebugUtil::DumpTensorPlugin::terminate () | override, virtual |

Release resources acquired during plugin layer initialization.

This is called when the engine is destroyed.

Implements nvinfer1::IPlugin.
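The pairing of initialize() and terminate() described above can be sketched as a small acquire/release pair. This is a minimal illustration, not the DumpTensorPlugin implementation: `HostStagingLayer` and `isInitialized()` are hypothetical names, and a plain heap array stands in for the `PinnedHostBuffer< float >` held in `mData`. The sketch returns 0 from `initialize()` to signal success, following the IPlugin convention.

```cpp
#include <cassert>
#include <memory>

// Hypothetical layer that owns a host staging buffer between
// initialize() and terminate(). A heap array stands in for pinned memory.
class HostStagingLayer
{
public:
    explicit HostStagingLayer(int tensorVolume)
        : mTensorVolume(tensorVolume)
    {
    }

    // Acquire resources when the engine is created. Returns 0 on success.
    int initialize()
    {
        assert(mTensorVolume > 0);
        mData.reset(new float[mTensorVolume]()); // zero-initialized
        return 0;
    }

    // Release everything acquired in initialize() when the engine is destroyed.
    void terminate()
    {
        mData.reset();
    }

    bool isInitialized() const
    {
        return mData != nullptr;
    }

private:
    int mTensorVolume;
    std::unique_ptr<float[]> mData;
};
```

Keeping all allocation in `initialize()` and all release in `terminate()` means the object can be constructed and configured cheaply, and holds memory only while an engine is alive.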

| size_t nmtSample::DebugUtil::DumpTensorPlugin::getWorkspaceSize (int maxBatchSize) const | override |

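| int nmtSample::DebugUtil::DumpTensorPlugin::enqueue (int batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream) | override |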

| size_t nmtSample::DebugUtil::DumpTensorPlugin::getSerializationSize () | override, virtual |

Find the size of the serialization buffer required.

Implements nvinfer1::IPlugin.

| void nmtSample::DebugUtil::DumpTensorPlugin::serialize (void *buffer) | override, virtual |

Serialize the layer.

| buffer | A pointer to a buffer of size at least that returned by getSerializationSize(). |

Implements nvinfer1::IPlugin.
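The relationship between getSerializationSize() and serialize() can be sketched as follows: the size function reports exactly the number of bytes that the serialize function writes. Whether DumpTensorPlugin serializes any state is not stated here, so `State`, `serializationSize`, `serializeState` and `deserializeState` are hypothetical names used only to illustrate the contract.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical layer state to persist (an assumption for illustration).
struct State
{
    int32_t tensorVolume;
    int32_t elemsPerRow;
};

// Number of bytes serializeState() will write.
size_t serializationSize()
{
    return 2 * sizeof(int32_t);
}

// Write the fields back to back into a caller-provided buffer of at
// least serializationSize() bytes.
void serializeState(const State& s, void* buffer)
{
    assert(buffer != nullptr);
    char* p = static_cast<char*>(buffer);
    std::memcpy(p, &s.tensorVolume, sizeof(s.tensorVolume));
    p += sizeof(s.tensorVolume);
    std::memcpy(p, &s.elemsPerRow, sizeof(s.elemsPerRow));
}

// Read the fields back in the same order they were written.
State deserializeState(const void* buffer)
{
    assert(buffer != nullptr);
    const char* p = static_cast<const char*>(buffer);
    State s{};
    std::memcpy(&s.tensorVolume, p, sizeof(s.tensorVolume));
    p += sizeof(s.tensorVolume);
    std::memcpy(&s.elemsPerRow, p, sizeof(s.elemsPerRow));
    return s;
}
```

Copying field by field with `std::memcpy` avoids depending on struct padding, so the reported size and the bytes written stay in agreement.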

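| virtual Dims nvinfer1::IPlugin::getOutputDimensions (int32_t index, const Dims *inputs, int32_t nbInputDims) | pure virtual, inherited |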

Get the dimension of an output tensor.

| Parameter | Description |
| --- | --- |
| index | The index of the output tensor. |
| inputs | The input tensors. |
| nbInputDims | The number of input tensors. |

This function is called by the implementations of INetworkDefinition and IBuilder. In particular, it is called prior to any call to initialize().
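As an illustration of this contract, the sketch below shows a pass-through layer that reports its single output as having the same shape as its first input. `Dims` is a simplified stand-in for `nvinfer1::Dims`, and `passThroughOutputDimensions` is a hypothetical name. Pass-through behavior is an assumption about what a debugging layer might do, not something taken from the DumpTensorPlugin source.

```cpp
#include <cassert>
#include <cstdint>

// Simplified stand-in for nvinfer1::Dims (assumption: only these fields).
struct Dims
{
    static constexpr int32_t MAX_DIMS = 8;
    int32_t nbDims;
    int32_t d[MAX_DIMS];
};

// Hypothetical pass-through: the only output has the shape of the first
// input. The batch dimension is not part of `inputs`.
Dims passThroughOutputDimensions(int32_t index, const Dims* inputs, int32_t nbInputDims)
{
    assert(index == 0);       // only one output is exposed
    assert(nbInputDims >= 1); // at least one input is required
    return inputs[0];
}
```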

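| virtual void nvinfer1::IPlugin::configure (const Dims *inputDims, int32_t nbInputs, const Dims *outputDims, int32_t nbOutputs, int32_t maxBatchSize) | pure virtual, inherited |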

Configure the layer.

This function is called by the builder prior to initialize(). It provides an opportunity for the layer to make algorithm choices on the basis of its weights, dimensions, and maximum batch size. The type is assumed to be FP32 and format NCHW.

| Parameter | Description |
| --- | --- |
| inputDims | The input tensor dimensions. |
| nbInputs | The number of inputs. |
| outputDims | The output tensor dimensions. |
| nbOutputs | The number of outputs. |
| maxBatchSize | The maximum batch size. |

The dimensions passed here do not include the outermost batch size (i.e. for 2-D image networks, they will be 3-dimensional CHW dimensions).

This method is not called for PluginExt classes; configureWithFormat is called instead.

Implemented in nvinfer1::IPluginExt.
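Because the dimensions passed to configure() exclude the batch size, a layer can cache per-sample quantities at this point. The sketch below computes a per-sample element count and a row width from the first input. `Dims` is a simplified stand-in for `nvinfer1::Dims`, and `LayerConfig`, `volume` and `configureFromInput` are hypothetical names. Using the innermost dimension as the row width is an assumption, not a documented behavior of `mElemsPerRow`.

```cpp
#include <cassert>
#include <cstdint>

// Simplified stand-in for nvinfer1::Dims (assumption: only these fields).
struct Dims
{
    static constexpr int32_t MAX_DIMS = 8;
    int32_t nbDims;
    int32_t d[MAX_DIMS];
};

// Number of elements in one sample. The batch size is not included,
// because the dimensions handed to configure() do not contain it.
int64_t volume(const Dims& dims)
{
    assert(dims.nbDims >= 0 && dims.nbDims <= Dims::MAX_DIMS);
    int64_t v = 1;
    for (int32_t i = 0; i < dims.nbDims; ++i)
    {
        v *= dims.d[i];
    }
    return v;
}

// Hypothetical state a layer might cache during configure().
struct LayerConfig
{
    Dims dims;
    int64_t tensorVolume;
    int32_t elemsPerRow;
};

LayerConfig configureFromInput(const Dims* inputDims, int32_t nbInputs)
{
    assert(nbInputs >= 1);
    const Dims& in = inputDims[0];
    LayerConfig cfg{};
    cfg.dims = in;
    cfg.tensorVolume = volume(in);
    // Assumption: one printed row per innermost dimension.
    cfg.elemsPerRow = in.nbDims > 0 ? in.d[in.nbDims - 1] : 1;
    return cfg;
}
```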

| virtual size_t nvinfer1::IPlugin::getWorkspaceSize (int32_t maxBatchSize) const | pure virtual, inherited |

Find the workspace size required by the layer.

This function is called during engine startup, after initialize(). The workspace size returned should be sufficient for any batch size up to the maximum.
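One simple way to satisfy the "sufficient for any batch size up to the maximum" requirement is to size the workspace for the maximum batch. The sketch below does this for a layer that needs a fixed amount of scratch space per sample. `workspaceBytes` and its parameters are hypothetical, and a layer that needs no scratch space could simply return 0.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical sizing rule: scratch space grows linearly with the batch,
// so sizing for maxBatchSize covers every smaller batch as well.
size_t workspaceBytes(int64_t elemsPerSample, size_t bytesPerElem, int32_t maxBatchSize)
{
    assert(elemsPerSample >= 0 && maxBatchSize >= 0);
    return static_cast<size_t>(elemsPerSample) * bytesPerElem
        * static_cast<size_t>(maxBatchSize);
}
```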

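| virtual int32_t nvinfer1::IPlugin::enqueue (int32_t batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream) | pure virtual, inherited |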

Execute the layer.

| Parameter | Description |
| --- | --- |
| batchSize | The number of inputs in the batch. |
| inputs | The memory for the input tensors. |
| outputs | The memory for the output tensors. |
| workspace | Workspace for execution. |
| stream | The stream in which to execute the kernels. |
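For a tensor-dumping layer, the host-side part of enqueue() could look like the sketch below, which writes each sample of a batch to a stream in rows. It assumes the tensor data has already been copied from device to host memory, and it omits the CUDA stream handling entirely. `dumpRows` and `dumpToString` are hypothetical helpers, and the output layout is an assumption rather than the format DumpTensorPlugin actually produces.

```cpp
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>

// Write `batchSize` samples of `tensorVolume` floats each, breaking the
// output into rows of `elemsPerRow` values. `data` must be host memory.
void dumpRows(std::ostream& out, const float* data, int batchSize, int tensorVolume, int elemsPerRow)
{
    assert(elemsPerRow > 0);
    for (int b = 0; b < batchSize; ++b)
    {
        out << "sample " << b << '\n';
        const float* sample = data + static_cast<std::ptrdiff_t>(b) * tensorVolume;
        for (int i = 0; i < tensorVolume; ++i)
        {
            // End the line after a full row or after the last element.
            const bool endOfRow = ((i + 1) % elemsPerRow == 0) || (i + 1 == tensorVolume);
            out << sample[i] << (endOfRow ? '\n' : ' ');
        }
    }
}

// Convenience wrapper that captures the dump in a string.
std::string dumpToString(const float* data, int batchSize, int tensorVolume, int elemsPerRow)
{
    std::ostringstream os;
    dumpRows(os, data, batchSize, tensorVolume, elemsPerRow);
    return os.str();
}
```

Taking a `std::ostream&` keeps the formatting independent of the destination, so the same routine can write to a file, to standard output, or to an in-memory string.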

| std::shared_ptr< std::ostream > nmtSample::DebugUtil::DumpTensorPlugin::mOut | private |

| nvinfer1::Dims nmtSample::DebugUtil::DumpTensorPlugin::mDims | private |

| int nmtSample::DebugUtil::DumpTensorPlugin::mTensorVolume | private |

| int nmtSample::DebugUtil::DumpTensorPlugin::mElemsPerRow | private |

| PinnedHostBuffer< float >::ptr nmtSample::DebugUtil::DumpTensorPlugin::mData | private |