Classes | |

| class | MeasureTime |

Functions | |

| def | parse_args () |

| def | question_features (tokens, question) |

| def | inference (features, tokens) |

| def | print_single_query (eval_time_elapsed, prediction, nbest_json) |

| def | parse_args (parser) |

| def | checkpoint_from_distributed (state_dict) |

| def | unwrap_distributed (state_dict) |

| def | load_and_setup_model (model_name, parser, checkpoint, fp16_run, cpu_run, forward_is_infer=False) |

| def | pad_sequences (batch) |

| def | prepare_input_sequence (texts, cpu_run=False) |

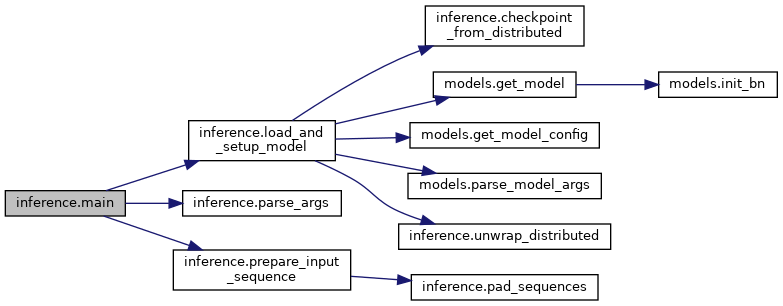

| def | main () |

Variables | |

| TRT_LOGGER = trt.Logger(trt.Logger.INFO) | |

| def | args = parse_args() |

| paragraph_text = None | |

| squad_examples = None | |

| output_prediction_file = None | |

| f = open(args.passage_file, 'r') | |

| question_text = None | |

| tokenizer = tokenization.FullTokenizer(vocab_file=args.vocab_file, do_lower_case=True) | |

| int | doc_stride = 128 |

| def | max_seq_length = args.sequence_length |

| handle = ctypes.CDLL("libnvinfer_plugin.so", mode=ctypes.RTLD_GLOBAL) | |

| int | selected_profile = -1 |

| num_binding_per_profile = engine.num_bindings // engine.num_optimization_profiles | |

| profile_shape = engine.get_profile_shape(profile_index = idx, binding = idx * num_binding_per_profile) | |

| active_optimization_profile | |

| int | binding_idx_offset = selected_profile * num_binding_per_profile |

| tuple | input_shape = (max_seq_length, args.batch_size) |

| input_nbytes = trt.volume(input_shape) * trt.int32.itemsize | |

| stream = cuda.Stream() | |

| list | d_inputs = [cuda.mem_alloc(input_nbytes) for binding in range(3)] |

| h_output = cuda.pagelocked_empty(tuple(context.get_binding_shape(binding_idx_offset + 3)), dtype=np.float32) | |

| d_output = cuda.mem_alloc(h_output.nbytes) | |

| all_predictions = collections.OrderedDict() | |

| def | features = question_features(example.doc_tokens, example.question_text) |

| eval_time_elapsed | |

| prediction | |

| nbest_json | |

| doc_tokens = dp.convert_doc_tokens(paragraph_text) | |

| list | EXIT_CMDS = ["exit", "quit"] |

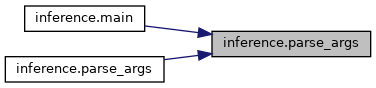

| def inference.parse_args | ( | ) |

Parse command line arguments

| def inference.question_features | ( | tokens, | |

| question | |||

| ) |

| def inference.inference | ( | features, | |

| tokens | |||

| ) |

| def inference.print_single_query | ( | eval_time_elapsed, | |

| prediction, | |||

| nbest_json | |||

| ) |

| def inference.parse_args | ( | parser | ) |

Parse commandline arguments.

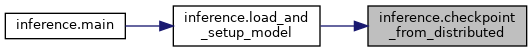

| def inference.checkpoint_from_distributed | ( | state_dict | ) |

Checks whether checkpoint was generated by DistributedDataParallel. DDP wraps model in additional "module.", it needs to be unwrapped for single GPU inference. :param state_dict: model's state dict

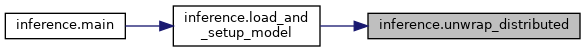

| def inference.unwrap_distributed | ( | state_dict | ) |

Unwraps model from DistributedDataParallel. DDP wraps model in additional "module.", it needs to be removed for single GPU inference. :param state_dict: model's state dict

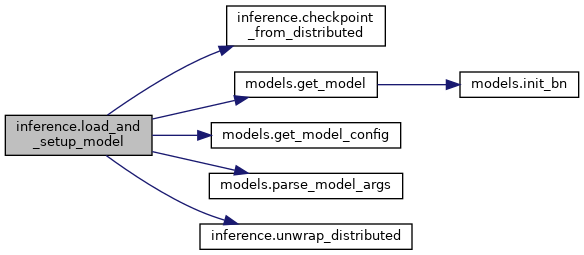

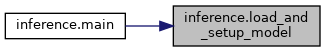

| def inference.load_and_setup_model | ( | model_name, | |

| parser, | |||

| checkpoint, | |||

| fp16_run, | |||

| cpu_run, | |||

forward_is_infer = False |

|||

| ) |

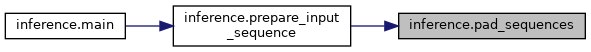

| def inference.pad_sequences | ( | batch | ) |

| def inference.prepare_input_sequence | ( | texts, | |

cpu_run = False |

|||

| ) |

| def inference.main | ( | ) |

Launches text to speech (inference). Inference is executed on a single GPU or CPU.

| inference.TRT_LOGGER = trt.Logger(trt.Logger.INFO) |

| def inference.args = parse_args() |

| string inference.paragraph_text = None |

| inference.squad_examples = None |

| def inference.output_prediction_file = None |

| inference.f = open(args.passage_file, 'r') |

| string inference.question_text = None |

| inference.tokenizer = tokenization.FullTokenizer(vocab_file=args.vocab_file, do_lower_case=True) |

| int inference.doc_stride = 128 |

| def inference.max_seq_length = args.sequence_length |

| inference.handle = ctypes.CDLL("libnvinfer_plugin.so", mode=ctypes.RTLD_GLOBAL) |

| inference.selected_profile = -1 |

| inference.num_binding_per_profile = engine.num_bindings // engine.num_optimization_profiles |

| inference.profile_shape = engine.get_profile_shape(profile_index = idx, binding = idx * num_binding_per_profile) |

| inference.active_optimization_profile |

| int inference.binding_idx_offset = selected_profile * num_binding_per_profile |

| tuple inference.input_shape = (max_seq_length, args.batch_size) |

| inference.input_nbytes = trt.volume(input_shape) * trt.int32.itemsize |

| inference.stream = cuda.Stream() |

| list inference.d_inputs = [cuda.mem_alloc(input_nbytes) for binding in range(3)] |

| inference.h_output = cuda.pagelocked_empty(tuple(context.get_binding_shape(binding_idx_offset + 3)), dtype=np.float32) |

| inference.d_output = cuda.mem_alloc(h_output.nbytes) |

| inference.all_predictions = collections.OrderedDict() |

| def inference.features = question_features(example.doc_tokens, example.question_text) |

| inference.eval_time_elapsed |

| inference.prediction |

| inference.nbest_json |

| inference.doc_tokens = dp.convert_doc_tokens(paragraph_text) |

| list inference.EXIT_CMDS = ["exit", "quit"] |