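```python
# ScaledQuantDescriptor is the descriptor type used by the predefined
# descriptors below.
QuantDescriptor = ScaledQuantDescriptor

# Per-tensor descriptors: no axis is given, so one scale covers the whole tensor.
QUANT_DESC_8BIT_PER_TENSOR = QuantDescriptor(num_bits=8)
QUANT_DESC_UNSIGNED_8BIT_PER_TENSOR = QuantDescriptor(num_bits=8, unsigned=True)
```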
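The two per-tensor descriptors differ only in the integer range they target. The sketch below uses the hypothetical helpers `int_range` and `per_tensor_scale`, which are not part of the library. It assumes symmetric, amax-based scaling, where a single scale maps the largest absolute value in the tensor onto the largest integer code. The library's exact arithmetic may differ.

```python
def int_range(num_bits=8, unsigned=False):
    """Hypothetical helper: (min_code, max_code) for a signed or unsigned descriptor."""
    if unsigned:
        return 0, 2 ** num_bits - 1
    return -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1


def per_tensor_scale(values, num_bits=8, unsigned=False):
    """Hypothetical helper: one scale for the whole tensor.

    Assumes symmetric amax scaling: max(|x|) maps onto the largest code.
    A zero amax is not handled in this sketch.
    """
    amax = max(abs(v) for v in values)
    _, max_code = int_range(num_bits, unsigned)
    return max_code / amax


print(int_range(8), int_range(8, unsigned=True))
print(per_tensor_scale([-0.5, 2.0]), per_tensor_scale([0.5, 2.0], unsigned=True))
```

The unsigned variant spends its extra bit on magnitude. This suits non-negative tensors such as post-ReLU activations, where the signed range would leave half the codes unused.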

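```python
# Per-channel weight descriptors: `axis` names the dimension that keeps its own
# scale; all other dimensions are reduced when computing amax.
# Note that `(0)` is the plain integer 0, not a one-element tuple.

# Conv weights are laid out (out_channels, in_channels / groups, *kernel_size),
# so axis 0 yields one scale per output channel.
QUANT_DESC_8BIT_CONV1D_WEIGHT_PER_CHANNEL = QuantDescriptor(num_bits=8, axis=(0))
QUANT_DESC_8BIT_CONV2D_WEIGHT_PER_CHANNEL = QuantDescriptor(num_bits=8, axis=(0))
QUANT_DESC_8BIT_CONV3D_WEIGHT_PER_CHANNEL = QuantDescriptor(num_bits=8, axis=(0))

# Linear weights are laid out (out_features, in_features), so axis 0 yields
# one scale per row (output feature).
QUANT_DESC_8BIT_LINEAR_WEIGHT_PER_ROW = QuantDescriptor(num_bits=8, axis=(0))
```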
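The following pure-Python sketch shows what `axis=(0)` means in practice. It computes one amax per output channel by reducing over every other dimension. The helpers are hypothetical, and nested lists stand in for tensors. The scale formula assumes the same symmetric, signed amax scheme as an 8-bit per-tensor descriptor.

```python
def _flatten(x):
    """Yield every scalar in an arbitrarily nested list."""
    if isinstance(x, list):
        for item in x:
            yield from _flatten(item)
    else:
        yield x


def per_channel_amax_axis0(weight):
    """Hypothetical helper: one amax per slice weight[c]; other dims are reduced."""
    return [max(abs(v) for v in _flatten(channel)) for channel in weight]


def per_channel_scales_axis0(weight, num_bits=8):
    """Hypothetical helper: signed per-channel scales, largest code / channel amax."""
    max_code = 2 ** (num_bits - 1) - 1
    return [max_code / amax for amax in per_channel_amax_axis0(weight)]


# Conv1d-style weight: (out_channels=2, in_channels=1, kernel=3)
conv1d_weight = [[[0.5, -1.0, 0.2]], [[0.1, 0.25, -0.05]]]
# Linear-style weight: (out_features=2, in_features=2)
linear_weight = [[0.5, -1.0], [0.1, 0.25]]

print(per_channel_amax_axis0(conv1d_weight))   # amax per output channel
print(per_channel_scales_axis0(linear_weight)) # scale per row
```

A single per-tensor scale would give the small second channel the first channel's scale (127), so it would use only a quarter of the code range. Per-channel scaling gives it 508 instead.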

```python
# ConvTranspose weights are laid out (in_channels, out_channels / groups,
# *kernel_size), so the output channel is dimension 1.
QUANT_DESC_8BIT_CONVTRANSPOSE1D_WEIGHT_PER_CHANNEL = QuantDescriptor(num_bits=8, axis=(1))
QUANT_DESC_8BIT_CONVTRANSPOSE2D_WEIGHT_PER_CHANNEL = QuantDescriptor(num_bits=8, axis=(1))
QUANT_DESC_8BIT_CONVTRANSPOSE3D_WEIGHT_PER_CHANNEL = QuantDescriptor(num_bits=8, axis=(1))
```
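For transposed convolutions, the reduction must keep dimension 1 instead. This sketch contrasts the per-output-channel amax with what reducing along axis 0 would produce. It uses a hypothetical helper, and a nested list stands in for a `ConvTranspose1d`-shaped weight.

```python
def _flatten(x):
    """Yield every scalar in an arbitrarily nested list."""
    if isinstance(x, list):
        for item in x:
            yield from _flatten(item)
    else:
        yield x


def per_channel_amax_axis1(weight):
    """Hypothetical helper for (in_channels, out_channels, *kernel) weights.

    Returns one amax per output channel j, reducing over input channels and kernel.
    """
    num_out = len(weight[0])
    return [
        max(abs(v) for in_slice in weight for v in _flatten(in_slice[j]))
        for j in range(num_out)
    ]


# ConvTranspose1d-style weight: (in_channels=2, out_channels=3, kernel=1)
deconv_weight = [
    [[0.1], [-0.9], [0.3]],
    [[0.4], [0.2], [-0.6]],
]

per_out = per_channel_amax_axis1(deconv_weight)
# Keeping axis 0 here would group by *input* channel instead.
per_in = [max(abs(v) for v in _flatten(s)) for s in deconv_weight]
print(per_out, per_in)
```

Keeping axis 0 here would yield two scales, one per input channel. Three output channels need three scales, which is why these descriptors use `axis=(1)`.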

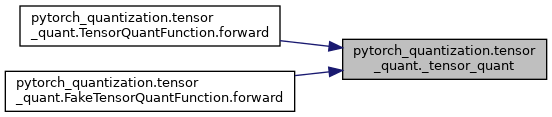

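```python
# Real quantization: maps inputs onto the integer grid. amp.promote_function
# wraps the autograd Function so that mixed-precision inputs are promoted to a
# common type before quantizing.
tensor_quant = amp.promote_function(TensorQuantFunction.apply)
```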
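The following minimal pure-Python sketch shows the per-element arithmetic of real quantization. It assumes symmetric scaling `scale = max_code / amax`, followed by rounding and clamping to the integer range. `tensor_quant_sketch` is hypothetical: it does not reproduce the signature of `tensor_quant` and only illustrates the arithmetic.

```python
def tensor_quant_sketch(values, amax, num_bits=8, unsigned=False):
    """Hypothetical helper: quantize to integer codes, return (codes, scale).

    Assumes symmetric amax scaling. Python's round() rounds halves to even.
    """
    max_code = 2 ** (num_bits - 1 + int(unsigned)) - 1
    min_code = 0 if unsigned else -max_code - 1
    scale = max_code / amax
    # Scale, round to the nearest integer, then clamp out-of-range values.
    codes = [min(max(round(v * scale), min_code), max_code) for v in values]
    return codes, scale


codes, scale = tensor_quant_sketch([-1.0, 0.2, 0.6, 1.0, 3.0], amax=1.0)
print(codes, scale)  # 3.0 exceeds amax and is clamped to the top code
```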

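```python
# Fake quantization: quantize and immediately dequantize, so the output stays
# in floating point (and in the input's range) but carries the rounding error.
fake_tensor_quant = amp.promote_function(FakeTensorQuantFunction.apply)
```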
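Fake quantization keeps values in floating point. Each output lies on the quantization grid, but in the input's original units. The following hypothetical sketch uses the same symmetric, signed assumptions as above.

```python
def fake_tensor_quant_sketch(values, amax, num_bits=8):
    """Hypothetical helper: symmetric signed quantize-then-dequantize."""
    max_code = 2 ** (num_bits - 1) - 1
    min_code = -max_code - 1
    scale = max_code / amax
    # Quantize (round + clamp), then divide by the scale to return to float.
    return [min(max(round(v * scale), min_code), max_code) / scale for v in values]


out8 = fake_tensor_quant_sketch([0.2, 3.0, -0.6], amax=1.0)
out4 = fake_tensor_quant_sketch([0.2], amax=1.0, num_bits=4)
print(out8, out4)
```

With 8 bits, 0.2 becomes 25/127 ≈ 0.1969. With 4 bits, the grid step grows to 1/7, and 0.2 becomes ≈ 0.1429. A network trained with fake quantization therefore sees the same rounding and clipping it will face at inference.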

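```python
# Fake affine quantization: like fake_tensor_quant, but with an asymmetric
# range (scale plus zero-point shift) instead of a symmetric one.
fake_affine_tensor_quant = amp.promote_function(FakeAffineTensorQuantFunction.apply)
```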
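Affine quantization adds a zero point, so an asymmetric range such as [-1, 3] can use the full unsigned code range. The sketch below follows the common asymmetric scheme, `scale = (max - min) / (2**num_bits - 1)` and `zero_point = round(-min / scale)`. This scheme is an assumption for illustration and may not match the exact arithmetic of `FakeAffineTensorQuantFunction`. The helper name is hypothetical.

```python
def fake_affine_quant_sketch(values, min_range, max_range, num_bits=8):
    """Hypothetical helper: asymmetric quantize-dequantize with a zero point.

    Assumes min_range <= 0 <= max_range so that 0.0 is exactly representable.
    Returns (dequantized_values, scale, zero_point).
    """
    if not min_range <= 0.0 <= max_range or min_range == max_range:
        raise ValueError("need min_range <= 0 <= max_range and a non-empty range")
    qmax = 2 ** num_bits - 1
    scale = (max_range - min_range) / qmax
    zero_point = round(-min_range / scale)
    out = []
    for v in values:
        # Shift by the zero point, clamp to [0, qmax], then undo the shift.
        q = min(max(round(v / scale) + zero_point, 0), qmax)
        out.append((q - zero_point) * scale)
    return out, scale, zero_point


out, scale, zero_point = fake_affine_quant_sketch([-1.0, 0.0, 3.0, 5.0], -1.0, 3.0)
print(zero_point, out)
```

Zero maps back to exactly 0.0, and in-range values land within half a step of their inputs. The out-of-range 5.0 is clamped to the same value as 3.0.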