◆ clear()

| void bert::Fused_multihead_attention_params_v2::clear() | inline |

◆ qkv_ptr

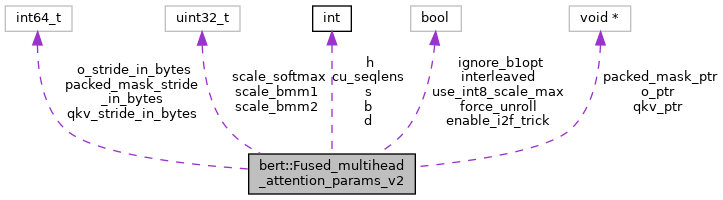

| void* bert::Fused_multihead_attention_params_v2::qkv_ptr |

◆ packed_mask_ptr

| void* bert::Fused_multihead_attention_params_v2::packed_mask_ptr |

◆ o_ptr

| void* bert::Fused_multihead_attention_params_v2::o_ptr |

◆ qkv_stride_in_bytes

| int64_t bert::Fused_multihead_attention_params_v2::qkv_stride_in_bytes |

◆ packed_mask_stride_in_bytes

| int64_t bert::Fused_multihead_attention_params_v2::packed_mask_stride_in_bytes |

◆ o_stride_in_bytes

| int64_t bert::Fused_multihead_attention_params_v2::o_stride_in_bytes |

◆ b

| int bert::Fused_multihead_attention_params_v2::b |

◆ h

| int bert::Fused_multihead_attention_params_v2::h |

◆ s

| int bert::Fused_multihead_attention_params_v2::s |

◆ d

| int bert::Fused_multihead_attention_params_v2::d |

◆ scale_bmm1

| uint32_t bert::Fused_multihead_attention_params_v2::scale_bmm1 |

◆ scale_softmax

| uint32_t bert::Fused_multihead_attention_params_v2::scale_softmax |

◆ scale_bmm2

| uint32_t bert::Fused_multihead_attention_params_v2::scale_bmm2 |

◆ enable_i2f_trick

| bool bert::Fused_multihead_attention_params_v2::enable_i2f_trick |

◆ cu_seqlens

| int* bert::Fused_multihead_attention_params_v2::cu_seqlens |

◆ interleaved

| bool bert::Fused_multihead_attention_params_v2::interleaved = false |

◆ ignore_b1opt

| bool bert::Fused_multihead_attention_params_v2::ignore_b1opt = false |

◆ force_unroll

| bool bert::Fused_multihead_attention_params_v2::force_unroll = false |

◆ use_int8_scale_max

| bool bert::Fused_multihead_attention_params_v2::use_int8_scale_max = false |
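
The member list above can be read as a plain parameter block that a caller resets and then fills in. The following is a minimal sketch of that pattern, not the actual definition from fused_multihead_attention_v2.h. `sketch::ParamsMirror` and `sketch::make_params` are hypothetical names. The body of `clear()` (reset everything to null, zero, or false) is an assumption. So is the stride arithmetic, which assumes a packed row of three matrices (Q, K, V), each holding `h * d` elements of `elem_bytes` bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

namespace sketch {

// Hypothetical stand-in that mirrors the member names and types listed on
// this page. It is NOT the real bert::Fused_multihead_attention_params_v2.
struct ParamsMirror {
    void* qkv_ptr;
    void* packed_mask_ptr;
    void* o_ptr;

    std::int64_t qkv_stride_in_bytes;
    std::int64_t packed_mask_stride_in_bytes;
    std::int64_t o_stride_in_bytes;

    int b, h, s, d;
    std::uint32_t scale_bmm1, scale_softmax, scale_bmm2;

    bool enable_i2f_trick;
    int* cu_seqlens;

    // These four carry "= false" defaults in the documented struct.
    bool interleaved = false;
    bool ignore_b1opt = false;
    bool force_unroll = false;
    bool use_int8_scale_max = false;

    // Assumed behavior: put every member into a null/zero/false state.
    inline void clear() {
        qkv_ptr = packed_mask_ptr = o_ptr = nullptr;
        qkv_stride_in_bytes = 0;
        packed_mask_stride_in_bytes = 0;
        o_stride_in_bytes = 0;
        b = h = s = d = 0;
        scale_bmm1 = scale_softmax = scale_bmm2 = 0;
        enable_i2f_trick = false;
        cu_seqlens = nullptr;
        interleaved = ignore_b1opt = force_unroll = use_int8_scale_max = false;
    }
};

// Hypothetical helper: reset the block, then fill in pointers, dimensions,
// and strides. Assumed layout: each QKV row packs 3 matrices of h * d
// elements, and each output row holds h * d elements.
inline ParamsMirror make_params(void* qkv, void* out,
                                int b, int h, int s, int d,
                                std::size_t elem_bytes) {
    assert(b > 0 && h > 0 && s > 0 && d > 0);
    ParamsMirror p;
    p.clear();
    p.qkv_ptr = qkv;
    p.o_ptr = out;
    p.b = b;
    p.h = h;
    p.s = s;
    p.d = d;
    const std::int64_t row = static_cast<std::int64_t>(h) * d
                           * static_cast<std::int64_t>(elem_bytes);
    p.qkv_stride_in_bytes = 3 * row;
    p.o_stride_in_bytes = row;
    return p;
}

}  // namespace sketch
```

Calling `clear()` before assigning fields means that members the caller does not set, such as `packed_mask_ptr` or `cu_seqlens` here, hold a defined value and not garbage. The scale fields are left at zero because this page does not document how they are encoded.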

The documentation for this struct was generated from the following file:

- fused_multihead_attention_v2.h