Public Member Functions | |

| InstanceNormalizationPlugin (float epsilon, nvinfer1::Weights const &scale, nvinfer1::Weights const &bias) | |

| InstanceNormalizationPlugin (float epsilon, const std::vector< float > &scale, const std::vector< float > &bias) | |

| InstanceNormalizationPlugin (void const *serialData, size_t serialLength) | |

| InstanceNormalizationPlugin ()=delete | |

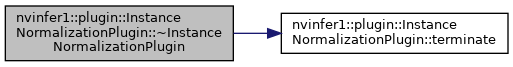

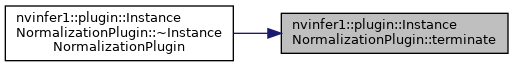

| ~InstanceNormalizationPlugin () override | |

| int | getNbOutputs () const override |

| Get the number of outputs from the layer. More... | |

| DimsExprs | getOutputDimensions (int outputIndex, const nvinfer1::DimsExprs *inputs, int nbInputs, nvinfer1::IExprBuilder &exprBuilder) override |

| int | initialize () override |

| Initialize the layer for execution. More... | |

| void | terminate () override |

| Release resources acquired during plugin layer initialization. More... | |

| size_t | getWorkspaceSize (const nvinfer1::PluginTensorDesc *inputs, int nbInputs, const nvinfer1::PluginTensorDesc *outputs, int nbOutputs) const override |

| int | enqueue (const nvinfer1::PluginTensorDesc *inputDesc, const nvinfer1::PluginTensorDesc *outputDesc, const void *const *inputs, void *const *outputs, void *workspace, cudaStream_t stream) override |

| Execute the layer. More... | |

| size_t | getSerializationSize () const override |

| Find the size of the serialization buffer required. More... | |

| void | serialize (void *buffer) const override |

| Serialize the layer. More... | |

| bool | supportsFormatCombination (int pos, const nvinfer1::PluginTensorDesc *inOut, int nbInputs, int nbOutputs) override |

| const char * | getPluginType () const override |

| Return the plugin type. More... | |

| const char * | getPluginVersion () const override |

| Return the plugin version. More... | |

| void | destroy () override |

| Destroy the plugin object. More... | |

| nvinfer1::IPluginV2DynamicExt * | clone () const override |

| Clone the plugin object. More... | |

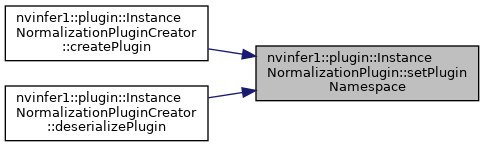

| void | setPluginNamespace (const char *pluginNamespace) override |

| Set the namespace that this plugin object belongs to. More... | |

| const char * | getPluginNamespace () const override |

| Return the namespace of the plugin object. More... | |

| DataType | getOutputDataType (int index, const nvinfer1::DataType *inputTypes, int nbInputs) const override |

| void | attachToContext (cudnnContext *cudnn, cublasContext *cublas, nvinfer1::IGpuAllocator *allocator) override |

| Attach the plugin object to an execution context and grant the plugin access to some context resources. More... | |

| void | detachFromContext () override |

| Detach the plugin object from its execution context. More... | |

| void | configurePlugin (const nvinfer1::DynamicPluginTensorDesc *in, int nbInputs, const nvinfer1::DynamicPluginTensorDesc *out, int nbOutputs) override |

| virtual DimsExprs | getOutputDimensions (int32_t outputIndex, const DimsExprs *inputs, int32_t nbInputs, IExprBuilder &exprBuilder)=0 |

| Get expressions for computing dimensions of an output tensor from dimensions of the input tensors. More... | |

| virtual Dims | getOutputDimensions (int32_t index, const Dims *inputs, int32_t nbInputDims)=0 |

| Get the dimension of an output tensor. More... | |

| virtual bool | supportsFormatCombination (int32_t pos, const PluginTensorDesc *inOut, int32_t nbInputs, int32_t nbOutputs)=0 |

| Return true if plugin supports the format and datatype for the input/output indexed by pos. More... | |

| virtual void | configurePlugin (const DynamicPluginTensorDesc *in, int32_t nbInputs, const DynamicPluginTensorDesc *out, int32_t nbOutputs)=0 |

| Configure the layer. More... | |

| virtual void | configurePlugin (const Dims *inputDims, int32_t nbInputs, const Dims *outputDims, int32_t nbOutputs, const DataType *inputTypes, const DataType *outputTypes, const bool *inputIsBroadcast, const bool *outputIsBroadcast, PluginFormat floatFormat, int32_t maxBatchSize)=0 |

| Configure the layer with input and output data types. More... | |

| virtual size_t | getWorkspaceSize (const PluginTensorDesc *inputs, int32_t nbInputs, const PluginTensorDesc *outputs, int32_t nbOutputs) const =0 |

| Find the workspace size required by the layer. More... | |

| virtual size_t | getWorkspaceSize (int32_t maxBatchSize) const =0 |

| Find the workspace size required by the layer. More... | |

| virtual int32_t | enqueue (int32_t batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream)=0 |

| Execute the layer. More... | |

| virtual nvinfer1::DataType | getOutputDataType (int32_t index, const nvinfer1::DataType *inputTypes, int32_t nbInputs) const =0 |

| Return the DataType of the plugin output at the requested index. More... | |

| virtual bool | isOutputBroadcastAcrossBatch (int32_t outputIndex, const bool *inputIsBroadcasted, int32_t nbInputs) const =0 |

| Return true if output tensor is broadcast across a batch. More... | |

| virtual bool | canBroadcastInputAcrossBatch (int32_t inputIndex) const =0 |

| Return true if plugin can use input that is broadcast across batch without replication. More... | |

| virtual bool | supportsFormat (DataType type, PluginFormat format) const =0 |

| Check format support. More... | |

Static Public Attributes | |

| static constexpr int32_t | kFORMAT_COMBINATION_LIMIT = 100 |

| Limit on number of format combinations accepted. More... | |

Protected Member Functions | |

| int32_t | getTensorRTVersion () const |

| Return the API version with which this plugin was built. More... | |

| __attribute__ ((deprecated)) Dims getOutputDimensions(int32_t | |

| Derived classes should not implement this. More... | |

| __attribute__ ((deprecated)) bool isOutputBroadcastAcrossBatch(int32_t | |

| Derived classes should not implement this. More... | |

| __attribute__ ((deprecated)) bool canBroadcastInputAcrossBatch(int32_t) const | |

| Derived classes should not implement this. More... | |

| __attribute__ ((deprecated)) bool supportsFormat(DataType | |

| Derived classes should not implement this. More... | |

| __attribute__ ((deprecated)) void configurePlugin(const Dims * | |

| Derived classes should not implement this. More... | |

| void | configureWithFormat (const Dims *, int32_t, const Dims *, int32_t, DataType, PluginFormat, int32_t) |

| Derived classes should not implement this. More... | |

Protected Attributes | |

| const Dims | int32_t |

| const bool const | int32_t |

| int32_t | |

| const | PluginFormat |

| const Dims const DataType const DataType const bool const bool | PluginFormat |

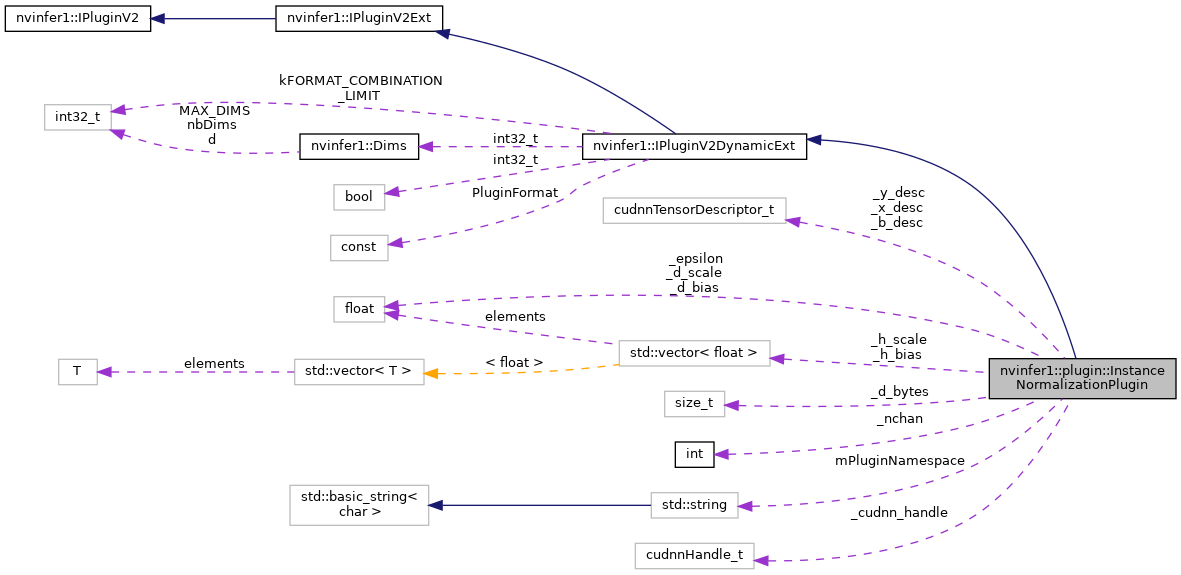

Private Attributes | |

| float | _epsilon |

| int | _nchan |

| std::vector< float > | _h_scale |

| std::vector< float > | _h_bias |

| float * | _d_scale |

| float * | _d_bias |

| size_t | _d_bytes |

| cudnnHandle_t | _cudnn_handle |

| cudnnTensorDescriptor_t | _x_desc |

| cudnnTensorDescriptor_t | _y_desc |

| cudnnTensorDescriptor_t | _b_desc |

| std::string | mPluginNamespace |

| InstanceNormalizationPlugin::InstanceNormalizationPlugin | ( | float | epsilon, |

| nvinfer1::Weights const & | scale, | ||

| nvinfer1::Weights const & | bias | ||

| ) |

| InstanceNormalizationPlugin::InstanceNormalizationPlugin | ( | float | epsilon, |

| const std::vector< float > & | scale, | ||

| const std::vector< float > & | bias | ||

| ) |

| InstanceNormalizationPlugin::InstanceNormalizationPlugin | ( | void const * | serialData, |

| size_t | serialLength | ||

| ) |

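| InstanceNormalizationPlugin::InstanceNormalizationPlugin () | delete |

| InstanceNormalizationPlugin::~InstanceNormalizationPlugin () | override |

| int InstanceNormalizationPlugin::getNbOutputs () const | override, virtual |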

Get the number of outputs from the layer.

This function is called by the implementations of INetworkDefinition and IBuilder. In particular, it is called prior to any call to initialize().

Implements nvinfer1::IPluginV2.

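| DimsExprs InstanceNormalizationPlugin::getOutputDimensions (int outputIndex, const nvinfer1::DimsExprs *inputs, int nbInputs, nvinfer1::IExprBuilder &exprBuilder) | override |

| int InstanceNormalizationPlugin::initialize () | override, virtual |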

Initialize the layer for execution.

This is called when the engine is created.

Implements nvinfer1::IPluginV2.

| void InstanceNormalizationPlugin::terminate () | override, virtual |

Release resources acquired during plugin layer initialization.

This is called when the engine is destroyed.

Implements nvinfer1::IPluginV2.

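| size_t InstanceNormalizationPlugin::getWorkspaceSize (const nvinfer1::PluginTensorDesc *inputs, int nbInputs, const nvinfer1::PluginTensorDesc *outputs, int nbOutputs) const | override |

| int InstanceNormalizationPlugin::enqueue (const nvinfer1::PluginTensorDesc *inputDesc, const nvinfer1::PluginTensorDesc *outputDesc, const void *const *inputs, void *const *outputs, void *workspace, cudaStream_t stream) | override, virtual |

Execute the layer.

| inputDesc | How to interpret the memory for the input tensors. |

| outputDesc | How to interpret the memory for the output tensors. |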

| inputs | The memory for the input tensors. |

| outputs | The memory for the output tensors. |

| workspace | Workspace for execution. |

| stream | The stream in which to execute the kernels. |

Implements nvinfer1::IPluginV2DynamicExt.
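This page does not spell out the arithmetic that the layer performs. As a rough aid, the following is a minimal CPU sketch of the standard instance normalization definition. It assumes a contiguous NCHW layout, a biased (population) variance, and that `epsilon`, `scale`, and `bias` play the roles suggested by the constructor arguments. The function name `instanceNormReference` is hypothetical, and the plugin's actual GPU implementation may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical CPU reference, not the plugin's code.
// x holds n * c * hw floats in NCHW order; scale and bias hold one value per channel.
std::vector<float> instanceNormReference(const std::vector<float>& x, std::size_t n, std::size_t c,
    std::size_t hw, float epsilon, const std::vector<float>& scale, const std::vector<float>& bias)
{
    assert(hw > 0);
    assert(x.size() == n * c * hw);
    assert(scale.size() == c && bias.size() == c);

    std::vector<float> y(x.size());
    for (std::size_t i = 0; i < n * c; ++i)
    {
        const float* in = x.data() + i * hw;
        float* out = y.data() + i * hw;

        // Mean over the spatial elements of one (sample, channel) pair.
        double mean = 0.0;
        for (std::size_t k = 0; k < hw; ++k)
        {
            mean += in[k];
        }
        mean /= static_cast<double>(hw);

        // Biased variance over the same elements.
        double var = 0.0;
        for (std::size_t k = 0; k < hw; ++k)
        {
            var += (in[k] - mean) * (in[k] - mean);
        }
        var /= static_cast<double>(hw);

        const double invStd = 1.0 / std::sqrt(var + epsilon);
        const std::size_t ch = i % c; // channel index of this (sample, channel) pair
        for (std::size_t k = 0; k < hw; ++k)
        {
            out[k] = static_cast<float>(scale[ch] * (in[k] - mean) * invStd + bias[ch]);
        }
    }
    return y;
}
```

A reference like this can be handy for checking the output of a GPU implementation on small tensors.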

| size_t InstanceNormalizationPlugin::getSerializationSize () const | override, virtual |

Find the size of the serialization buffer required.

Implements nvinfer1::IPluginV2.

| void InstanceNormalizationPlugin::serialize (void *buffer) const | override, virtual |

Serialize the layer.

| buffer | A pointer to a buffer to serialize data. Size of buffer must be equal to value returned by getSerializationSize. |

Implements nvinfer1::IPluginV2.
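The contract between getSerializationSize() and serialize() is that the buffer holds exactly the number of bytes reported. The sketch below illustrates that contract with a hypothetical byte layout (epsilon, channel count, scale values, bias values). The struct `NormParams` and the functions `serializationSize`, `serializeParams`, and `deserializeParams` are illustrative names, and the layout is an assumption, not the plugin's documented format.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical parameter bundle; field names are illustrative only.
struct NormParams
{
    float epsilon;
    std::vector<float> scale;
    std::vector<float> bias;
};

// Bytes needed for: epsilon, channel count, then the scale and bias values.
std::size_t serializationSize(const NormParams& p)
{
    return sizeof(float) + sizeof(int) + (p.scale.size() + p.bias.size()) * sizeof(float);
}

// Copy `bytes` bytes from `src` to the cursor and advance the cursor.
static void writeBytes(char*& cursor, const void* src, std::size_t bytes)
{
    if (bytes > 0)
    {
        std::memcpy(cursor, src, bytes);
        cursor += bytes;
    }
}

// Copy `bytes` bytes from the cursor to `dst` and advance the cursor.
static void readBytes(const char*& cursor, void* dst, std::size_t bytes)
{
    if (bytes > 0)
    {
        std::memcpy(dst, cursor, bytes);
        cursor += bytes;
    }
}

void serializeParams(const NormParams& p, void* buffer)
{
    assert(p.scale.size() == p.bias.size());
    char* cursor = static_cast<char*>(buffer);
    const int nchan = static_cast<int>(p.scale.size());
    writeBytes(cursor, &p.epsilon, sizeof(float));
    writeBytes(cursor, &nchan, sizeof(int));
    writeBytes(cursor, p.scale.data(), nchan * sizeof(float));
    writeBytes(cursor, p.bias.data(), nchan * sizeof(float));
    // The cursor must land exactly at the size reported to the caller.
    assert(static_cast<std::size_t>(cursor - static_cast<char*>(buffer)) == serializationSize(p));
}

NormParams deserializeParams(const void* data, std::size_t length)
{
    assert(length >= sizeof(float) + sizeof(int));
    const char* cursor = static_cast<const char*>(data);
    NormParams p{};
    int nchan = 0;
    readBytes(cursor, &p.epsilon, sizeof(float));
    readBytes(cursor, &nchan, sizeof(int));
    assert(nchan >= 0);
    p.scale.resize(nchan);
    p.bias.resize(nchan);
    // Reject buffers whose length disagrees with the encoded channel count.
    assert(length == serializationSize(p));
    readBytes(cursor, p.scale.data(), nchan * sizeof(float));
    readBytes(cursor, p.bias.data(), nchan * sizeof(float));
    return p;
}
```

Writing the channel count before the arrays lets the reading side size its vectors and validate the buffer length before copying any array data.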

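| bool InstanceNormalizationPlugin::supportsFormatCombination (int pos, const nvinfer1::PluginTensorDesc *inOut, int nbInputs, int nbOutputs) | override |

| const char * InstanceNormalizationPlugin::getPluginType () const | override, virtual |

Return the plugin type.

Should match the plugin name returned by the corresponding plugin creator.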

Implements nvinfer1::IPluginV2.

| const char * InstanceNormalizationPlugin::getPluginVersion () const | override, virtual |

Return the plugin version.

Should match the plugin version returned by the corresponding plugin creator.

Implements nvinfer1::IPluginV2.

| void InstanceNormalizationPlugin::destroy () | override, virtual |

Destroy the plugin object.

This will be called when the network, builder or engine is destroyed.

Implements nvinfer1::IPluginV2.

| nvinfer1::IPluginV2DynamicExt * InstanceNormalizationPlugin::clone () const | override, virtual |

Clone the plugin object.

This copies over internal plugin parameters as well and returns a new plugin object with these parameters. If the source plugin is pre-configured with configurePlugin(), the returned object should also be pre-configured. The returned object should allow attachToContext() with a new execution context. Cloned plugin objects can share the same per-engine immutable resource (e.g. weights) with the source object (e.g. via ref-counting) to avoid duplication.

Implements nvinfer1::IPluginV2DynamicExt.
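The remark about sharing immutable resources through ref-counting can be sketched as follows. This is an illustration only: `SketchPlugin` is a hypothetical stand-in class, not the TensorRT interface, and it assumes the weights never change after construction so that clones can safely share them through `std::shared_ptr`.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-in for a plugin class; not part of TensorRT.
class SketchPlugin
{
public:
    SketchPlugin(float epsilon, std::vector<float> scale)
        : mEpsilon(epsilon)
        , mScale(std::make_shared<const std::vector<float>>(std::move(scale)))
    {
    }

    // The copy constructor copies the scalar parameter and bumps the
    // reference count on the shared weights instead of duplicating them.
    // The caller owns the returned object.
    SketchPlugin* clone() const
    {
        return new SketchPlugin(*this);
    }

    float epsilon() const { return mEpsilon; }
    const std::vector<float>& scale() const { return *mScale; }
    long useCount() const { return mScale.use_count(); }

private:
    float mEpsilon;
    std::shared_ptr<const std::vector<float>> mScale; // immutable, shared across clones
};
```

Holding the weights as a pointer to a `const` vector makes the immutability explicit: no clone can modify data that another clone is reading.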

| void InstanceNormalizationPlugin::setPluginNamespace (const char *pluginNamespace) | override, virtual |

Set the namespace that this plugin object belongs to.

Ideally, all plugin objects from the same plugin library should have the same namespace.

Implements nvinfer1::IPluginV2.

| const char * InstanceNormalizationPlugin::getPluginNamespace () const | override, virtual |

Return the namespace of the plugin object.

Implements nvinfer1::IPluginV2.

| DataType InstanceNormalizationPlugin::getOutputDataType (int index, const nvinfer1::DataType *inputTypes, int nbInputs) const | override |

| void InstanceNormalizationPlugin::attachToContext (cudnnContext *cudnn, cublasContext *cublas, nvinfer1::IGpuAllocator *allocator) | override, virtual |

Attach the plugin object to an execution context and grant the plugin access to some context resources.

| cudnn | The cuDNN context handle of the execution context. |

| cublas | The cuBLAS context handle of the execution context. |

| allocator | The allocator used by the execution context. |

This function is called automatically for each plugin when a new execution context is created. If the plugin needs per-context resources, they can be allocated here. The plugin can also obtain the context-owned cuDNN and cuBLAS contexts here.

Reimplemented from nvinfer1::IPluginV2Ext.

| void InstanceNormalizationPlugin::detachFromContext () | override, virtual |

Detach the plugin object from its execution context.

This function is called automatically for each plugin when an execution context is destroyed. If the plugin owns per-context resources, they can be released here.

Reimplemented from nvinfer1::IPluginV2Ext.

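| void InstanceNormalizationPlugin::configurePlugin (const nvinfer1::DynamicPluginTensorDesc *in, int nbInputs, const nvinfer1::DynamicPluginTensorDesc *out, int nbOutputs) | override |

| virtual DimsExprs getOutputDimensions (int32_t outputIndex, const DimsExprs *inputs, int32_t nbInputs, IExprBuilder &exprBuilder) | pure virtual, inherited |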

Get expressions for computing dimensions of an output tensor from dimensions of the input tensors.

| outputIndex | The index of the output tensor |

| inputs | Expressions for dimensions of the input tensors |

| nbInputs | The number of input tensors |

| exprBuilder | Object for generating new expressions |

This function is called by the implementations of IBuilder during analysis of the network.

Example #1: A plugin has a single output that transposes the last two dimensions of the plugin's single input. The body of the override of getOutputDimensions can be:

DimsExprs output(inputs[0]); std::swap(output.d[output.nbDims-1], output.d[output.nbDims-2]); return output;

Example #2: A plugin concatenates its two inputs along the first dimension. The body of the override of getOutputDimensions can be:

DimsExprs output(inputs[0]); output.d[0] = exprBuilder.operation(DimensionOperation::kSUM, *inputs[0].d[0], *inputs[1].d[0]); return output;

| virtual Dims getOutputDimensions (int32_t index, const Dims *inputs, int32_t nbInputDims) | pure virtual, inherited |

Get the dimension of an output tensor.

| index | The index of the output tensor. |

| inputs | The input tensors. |

| nbInputDims | The number of input tensors. |

This function is called by the implementations of INetworkDefinition and IBuilder. In particular, it is called prior to any call to initialize().

| virtual bool supportsFormatCombination (int32_t pos, const PluginTensorDesc *inOut, int32_t nbInputs, int32_t nbOutputs) | pure virtual, inherited |

Return true if plugin supports the format and datatype for the input/output indexed by pos.

For this method inputs are numbered 0..(nbInputs-1) and outputs are numbered nbInputs..(nbInputs+nbOutputs-1). Using this numbering, pos is an index into inOut, where 0 <= pos < nbInputs+nbOutputs.

TensorRT invokes this method to ask if the input/output indexed by pos supports the format/datatype specified by inOut[pos].format and inOut[pos].type. The override should return true if the format/datatype at inOut[pos] is supported by the plugin. If support is conditional on other input/output formats/datatypes, the plugin can make its result conditional on the formats/datatypes in inOut[0..pos-1], which will be set to values that the plugin supports. The override should not inspect inOut[pos+1..nbInputs+nbOutputs-1], which will have invalid values. In other words, the decision for pos must be based on inOut[0..pos] only.

Some examples:

A plugin that supports only FP16 in linear format:

return inOut[pos].format == TensorFormat::kLINEAR && inOut[pos].type == DataType::kHALF;

A plugin that supports only linear format, with FP16 for the tensors at positions 0 and 1 and FP32 for all later positions:

return inOut[pos].format == TensorFormat::kLINEAR && inOut[pos].type == (pos < 2 ? DataType::kHALF : DataType::kFLOAT);

A plugin that accepts any format and type at position 0, provided every other input and output uses the same format and type:

return pos == 0 || (inOut[pos].format == inOut[0].format && inOut[pos].type == inOut[0].type);

Warning: TensorRT will stop asking for formats once it finds kFORMAT_COMBINATION_LIMIT combinations.
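To make the "decide from inOut[0..pos] only" rule concrete, here is a self-contained sketch. The types in the `sketch` namespace are simplified stand-ins for the TensorRT types of the same names, declared locally so the example compiles without the TensorRT headers. The policy shown (linear format only, FP32 or FP16 at position 0, every later tensor matching the type at position 0) is an assumed example, not the behaviour of InstanceNormalizationPlugin.

```cpp
#include <cassert>
#include <cstdint>

namespace sketch
{
// Simplified stand-ins for the TensorRT types of the same names.
enum class DataType { kFLOAT, kHALF };
enum class TensorFormat { kLINEAR, kCHW32 };
struct PluginTensorDesc
{
    DataType type;
    TensorFormat format;
};

// Assumed policy: linear format everywhere, FP32 or FP16 at position 0,
// and every later input/output must use the same type as position 0.
bool supportsFormatCombination(
    std::int32_t pos, const PluginTensorDesc* inOut, std::int32_t nbInputs, std::int32_t nbOutputs)
{
    // Valid positions are 0 .. nbInputs + nbOutputs - 1.
    assert(pos >= 0 && pos < nbInputs + nbOutputs);

    const PluginTensorDesc& desc = inOut[pos];
    if (desc.format != TensorFormat::kLINEAR)
    {
        return false;
    }
    if (pos == 0)
    {
        return desc.type == DataType::kFLOAT || desc.type == DataType::kHALF;
    }
    // Only entries at indices <= pos are inspected, as the contract requires.
    return desc.type == inOut[0].type;
}
} // namespace sketch
```

Because the function never reads beyond `inOut[pos]`, it stays valid while the later entries are still unset.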

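| virtual void configurePlugin (const DynamicPluginTensorDesc *in, int32_t nbInputs, const DynamicPluginTensorDesc *out, int32_t nbOutputs) | pure virtual, inherited |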

Configure the layer.

This function is called by the builder prior to initialize(). It provides an opportunity for the layer to make algorithm choices on the basis of bounds on the input and output tensors, and the target value.

This function is also called once when the resource requirements are changed based on the optimization profiles.

| in | The input tensors attributes that are used for configuration. |

| nbInputs | Number of input tensors. |

| out | The output tensors attributes that are used for configuration. |

| nbOutputs | Number of output tensors. |

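| virtual void configurePlugin (const Dims *inputDims, int32_t nbInputs, const Dims *outputDims, int32_t nbOutputs, const DataType *inputTypes, const DataType *outputTypes, const bool *inputIsBroadcast, const bool *outputIsBroadcast, PluginFormat floatFormat, int32_t maxBatchSize) | pure virtual, inherited |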

Configure the layer with input and output data types.

This function is called by the builder prior to initialize(). It provides an opportunity for the layer to make algorithm choices on the basis of its weights, dimensions, data types and maximum batch size.

| inputDims | The input tensor dimensions. |

| nbInputs | The number of inputs. |

| outputDims | The output tensor dimensions. |

| nbOutputs | The number of outputs. |

| inputTypes | The data types selected for the plugin inputs. |

| outputTypes | The data types selected for the plugin outputs. |

| inputIsBroadcast | True for each input that the plugin must broadcast across the batch. |

| outputIsBroadcast | True for each output that TensorRT will broadcast across the batch. |

| floatFormat | The format selected for the engine for the floating point inputs/outputs. |

| maxBatchSize | The maximum batch size. |

The dimensions passed here do not include the outermost batch size (i.e. for 2-D image networks, they will be 3-dimensional CHW dimensions). When inputIsBroadcast or outputIsBroadcast is true, the outermost batch size for that input or output should be treated as if it is one. inputIsBroadcast[i] is true only if the input is semantically broadcast across the batch and canBroadcastInputAcrossBatch(i) returned true. outputIsBroadcast[i] is true only if isOutputBroadcastAcrossBatch(i) returned true.

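| virtual size_t getWorkspaceSize (const PluginTensorDesc *inputs, int32_t nbInputs, const PluginTensorDesc *outputs, int32_t nbOutputs) const | pure virtual, inherited |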

Find the workspace size required by the layer.

This function is called after the plugin is configured, and possibly during execution. The result should be a sufficient workspace size to deal with inputs and outputs of the given size or any smaller problem.

| virtual size_t getWorkspaceSize (int32_t maxBatchSize) const | pure virtual, inherited |

Find the workspace size required by the layer.

This function is called during engine startup, after initialize(). The workspace size returned should be sufficient for any batch size up to the maximum.

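| virtual int32_t enqueue (int32_t batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream) | pure virtual, inherited |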

Execute the layer.

| batchSize | The number of inputs in the batch. |

| inputs | The memory for the input tensors. |

| outputs | The memory for the output tensors. |

| workspace | Workspace for execution. |

| stream | The stream in which to execute the kernels. |

| int32_t getTensorRTVersion () const | inline, protected, virtual, inherited |

Return the API version with which this plugin was built.

Do not override this method as it is used by the TensorRT library to maintain backwards-compatibility with plugins.

Reimplemented from nvinfer1::IPluginV2.

| getOutputDimensions (deprecated overload returning Dims) | protected, inherited |

Derived classes should not implement this.

In a C++11 API it would be override final.

Instead, derived classes should override the overload of getOutputDimensions that returns DimsExprs.

| isOutputBroadcastAcrossBatch (deprecated overload) | protected, inherited |

Derived classes should not implement this.

In a C++11 API it would be override final.

This method is not used because with dynamic shapes there is no implicit batch dimension to broadcast across.

| __attribute__ ((deprecated)) bool canBroadcastInputAcrossBatch (int32_t) const | inline, protected, inherited |

Derived classes should not implement this.

In a C++11 API it would be override final.

This method is not used because with dynamic shapes there is no implicit batch dimension to broadcast across.

| supportsFormat (deprecated overload) | protected, inherited |

Derived classes should not implement this.

In a C++11 API it would be override final.

This method is not used because it does not allow a plugin to specify mixed formats.

Instead, derived classes should override supportsFormatCombination, which allows plugins to express mixed formats.

| configurePlugin (deprecated overload taking pointers to Dims) | protected, inherited |

Derived classes should not implement this.

In a C++11 API it would be override final.

This method is not used because tensors with dynamic shapes do not have an implicit batch dimension, input dimensions might be variable, and outputs might have different floating-point formats.

Instead, derived classes should override the overload of configurePlugin that takes pointers to DynamicPluginTensorDesc.

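| virtual nvinfer1::DataType getOutputDataType (int32_t index, const nvinfer1::DataType *inputTypes, int32_t nbInputs) const | pure virtual, inherited |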

Return the DataType of the plugin output at the requested index.

The default behavior should be to return the type of the first input, or DataType::kFLOAT if the layer has no inputs. The returned data type must have a format that is supported by the plugin.
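The default behavior described above can be sketched in a few lines. The `DataType` enum below is a local stand-in for `nvinfer1::DataType` so the example compiles on its own, and `defaultOutputDataType` is a hypothetical free function rather than the plugin's member.

```cpp
#include <cassert>
#include <cstdint>

namespace sketch
{
// Local stand-in for nvinfer1::DataType.
enum class DataType { kFLOAT, kHALF, kINT8 };

// Return the type of the first input, or kFLOAT when the layer has no inputs.
DataType defaultOutputDataType(std::int32_t index, const DataType* inputTypes, std::int32_t nbInputs)
{
    assert(index >= 0);
    return nbInputs > 0 ? inputTypes[0] : DataType::kFLOAT;
}
} // namespace sketch
```

A real override would also need to make sure the returned type is one the plugin accepts in supportsFormatCombination.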

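| virtual bool isOutputBroadcastAcrossBatch (int32_t outputIndex, const bool *inputIsBroadcasted, int32_t nbInputs) const | pure virtual, inherited |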

Return true if output tensor is broadcast across a batch.

| outputIndex | The index of the output |

| inputIsBroadcasted | The ith element is true if the tensor for the ith input is broadcast across a batch. |

| nbInputs | The number of inputs |

The values in inputIsBroadcasted refer to broadcasting at the semantic level, i.e. are unaffected by whether method canBroadcastInputAcrossBatch requests physical replication of the values.

| virtual bool canBroadcastInputAcrossBatch (int32_t inputIndex) const | pure virtual, inherited |

Return true if plugin can use input that is broadcast across batch without replication.

| inputIndex | Index of input that could be broadcast. |

For each input whose tensor is semantically broadcast across a batch, TensorRT calls this method before calling configurePlugin. If canBroadcastInputAcrossBatch returns true, TensorRT will not replicate the input tensor; i.e., there will be a single copy that the plugin should share across the batch. If it returns false, TensorRT will replicate the input tensor so that it appears like a non-broadcasted tensor.

This method is called only for inputs that can be broadcast.

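| void configureWithFormat (const Dims *, int32_t, const Dims *, int32_t, DataType, PluginFormat, int32_t) | inline, protected, virtual, inherited |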

Derived classes should not implement this.

In a C++11 API it would be override final.

Implements nvinfer1::IPluginV2.

| virtual bool supportsFormat (DataType type, PluginFormat format) const | pure virtual, inherited |

Check format support.

| type | DataType requested. |

| format | PluginFormat requested. |

This function is called by the implementations of INetworkDefinition, IBuilder, and safe::ICudaEngine/ICudaEngine. In particular, it is called when creating an engine and when deserializing an engine.

Implemented in nvinfer1::plugin::FlattenConcat, nvinfer1::plugin::ProposalPlugin, nvinfer1::plugin::CropAndResizePlugin, nvinfer1::plugin::SpecialSlice, nvinfer1::plugin::ProposalLayer, nvinfer1::plugin::BatchTilePlugin, nvinfer1::plugin::CoordConvACPlugin, nvinfer1::plugin::GenerateDetection, nvinfer1::plugin::MultilevelCropAndResize, nvinfer1::plugin::PyramidROIAlign, nvinfer1::plugin::DetectionLayer, nvinfer1::plugin::MultilevelProposeROI, nvinfer1::plugin::ResizeNearest, nvinfer1::plugin::LReLU, nvinfer1::plugin::Normalize, nvinfer1::plugin::DetectionOutput, nvinfer1::plugin::RPROIPlugin, nvinfer1::plugin::PriorBox, nvinfer1::plugin::Region, nvinfer1::plugin::Reorg, nvinfer1::plugin::GridAnchorGenerator, and nvinfer1::plugin::BatchedNMSPlugin.

| static constexpr int32_t kFORMAT_COMBINATION_LIMIT = 100 | static, constexpr, inherited |

Limit on number of format combinations accepted.
