Classes

| class | BertConfig |

Functions

| def | set_tensor_name (tensor, prefix, name) |

| def | set_output_name (layer, prefix, name, out_idx=0) |

| def | set_output_range (layer, maxval, out_idx=0) |

| def | get_mha_dtype (config) |

| def | attention_layer_opt (prefix, config, init_dict, network, input_tensor, imask) |

| def | skipln (prefix, config, init_dict, network, input_tensor, skip, bias=None) |

| def | custom_fc (config, network, input_tensor, out_dims, W) |

| def | transformer_layer_opt (prefix, config, init_dict, network, input_tensor, imask) |

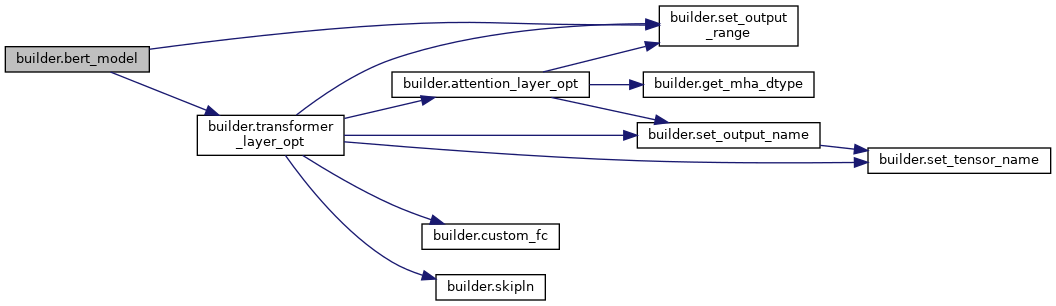

| def | bert_model (config, init_dict, network, input_tensor, input_mask) |

| def | squad_output (prefix, config, init_dict, network, input_tensor) |

| def | load_tf_weights (inputbase, config) |

| def | onnx_to_trt_name (onnx_name) |

| def | load_onnx_weights_and_quant (path, config) |

| def | emb_layernorm (builder, network, config, weights_dict, builder_config, sequence_lengths, batch_sizes) |

| def | build_engine (batch_sizes, workspace_size, sequence_lengths, config, weights_dict, squad_json, vocab_file, calibrationCacheFile, calib_num) |

| def | generate_calibration_cache (sequence_lengths, workspace_size, config, weights_dict, squad_json, vocab_file, calibrationCacheFile, calib_num) |

| def | main () |

Variables

| TRT_LOGGER = trt.Logger(trt.Logger.INFO) | |

| handle = ctypes.CDLL("libnvinfer_plugin.so", mode=ctypes.RTLD_GLOBAL) | |

| plg_registry = trt.get_plugin_registry() | |

| emln_plg_creator = plg_registry.get_plugin_creator("CustomEmbLayerNormPluginDynamic", "1", "") | |

| qkv2_plg_creator = plg_registry.get_plugin_creator("CustomQKVToContextPluginDynamic", "1", "") | |

| skln_plg_creator = plg_registry.get_plugin_creator("CustomSkipLayerNormPluginDynamic", "1", "") | |

| fc_plg_creator = plg_registry.get_plugin_creator("CustomFCPluginDynamic", "1", "") | |

| string | WQ = "self_query_kernel" |

| string | BQ = "self_query_bias" |

| string | WK = "self_key_kernel" |

| string | BK = "self_key_bias" |

| string | WV = "self_value_kernel" |

| string | BV = "self_value_bias" |

| string | WQKV = "self_qkv_kernel" |

| string | BQKV = "self_qkv_bias" |

| string | W_AOUT = "attention_output_dense_kernel" |

| string | B_AOUT = "attention_output_dense_bias" |

| string | AOUT_LN_BETA = "attention_output_layernorm_beta" |

| string | AOUT_LN_GAMMA = "attention_output_layernorm_gamma" |

| string | W_MID = "intermediate_dense_kernel" |

| string | B_MID = "intermediate_dense_bias" |

| string | W_LOUT = "output_dense_kernel" |

| string | B_LOUT = "output_dense_bias" |

| string | LOUT_LN_BETA = "output_layernorm_beta" |

| string | LOUT_LN_GAMMA = "output_layernorm_gamma" |

| string | SQD_W = "squad_output_weights" |

| string | SQD_B = "squad_output_bias" |
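The string constants above are key suffixes rather than complete keys. The following is a minimal sketch of how they might combine with a per-layer prefix into `init_dict` lookups. It assumes prefixes of the form `l<i>_` (plus `attention_` for the Q/K/V parameters); `layer_weight_keys` and `missing_keys` are hypothetical helpers, and the fused `WQKV`/`BQKV` entries are left out.

```python
# Suffix constants as listed in the module's Variables section.
WQ, BQ = "self_query_kernel", "self_query_bias"
WK, BK = "self_key_kernel", "self_key_bias"
WV, BV = "self_value_kernel", "self_value_bias"
W_AOUT, B_AOUT = "attention_output_dense_kernel", "attention_output_dense_bias"
AOUT_LN_BETA, AOUT_LN_GAMMA = "attention_output_layernorm_beta", "attention_output_layernorm_gamma"
W_MID, B_MID = "intermediate_dense_kernel", "intermediate_dense_bias"
W_LOUT, B_LOUT = "output_dense_kernel", "output_dense_bias"
LOUT_LN_BETA, LOUT_LN_GAMMA = "output_layernorm_beta", "output_layernorm_gamma"


def layer_weight_keys(layer) -> list:
    """Return the init_dict keys for one encoder layer (hypothetical helper).

    Assumption: every key starts with "l<layer>_", and the separate Q/K/V
    parameters additionally carry an "attention_" prefix.
    """
    prefix = f"l{layer}_"
    attn = prefix + "attention_"
    qkv = [attn + s for s in (WQ, BQ, WK, BK, WV, BV)]
    rest = [prefix + s for s in (W_AOUT, B_AOUT, AOUT_LN_BETA, AOUT_LN_GAMMA,
                                 W_MID, B_MID, W_LOUT, B_LOUT,
                                 LOUT_LN_BETA, LOUT_LN_GAMMA)]
    return qkv + rest


def missing_keys(init_dict, num_layers) -> list:
    """List the expected per-layer keys that are absent from a weights dict."""
    return [k for i in range(num_layers)
            for k in layer_weight_keys(i) if k not in init_dict]
```

A check like `missing_keys(weights, num_layers)` can surface a naming mismatch before any network construction starts.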

| def builder.set_tensor_name | (tensor, prefix, name) |

| def builder.set_output_name | (layer, prefix, name, out_idx = 0) |

| def builder.set_output_range | (layer, maxval, out_idx = 0) |

| def builder.get_mha_dtype | (config) |

| def builder.attention_layer_opt | (prefix, config, init_dict, network, input_tensor, imask) |

Add the attention layer
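Per head, the attention step computes softmax(Q·Kᵀ / sqrt(H))·V, with padded positions excluded. The pure-Python reference below is a sketch of that math only, useful for checking outputs on tiny inputs. It does not reproduce how `CustomQKVToContextPluginDynamic` lays out its fused QKV input, and the 0/1 mask convention is an assumption.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(q, k, v, mask):
    """Scaled dot-product attention for a single head.

    q, k, v: lists of S vectors of length H (one vector per token).
    mask: list of S ints, 1 = real token, 0 = padding (assumed convention).
    At least one token must be unmasked, otherwise softmax is undefined.
    Returns S context vectors of length H.
    """
    head_size = len(q[0])
    scale = 1.0 / math.sqrt(head_size)
    context = []
    for qi in q:
        # Masked keys get -inf, so their softmax weight becomes exactly 0.
        scores = [
            sum(a * b for a, b in zip(qi, kj)) * scale if m else float("-inf")
            for kj, m in zip(k, mask)
        ]
        probs = softmax(scores)
        # Weighted sum of the value vectors.
        context.append([sum(p * vj[h] for p, vj in zip(probs, v))
                        for h in range(head_size)])
    return context
```

Masking the second token makes every query attend only to the first value vector, which is an easy way to sanity-check a mask.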

| def builder.skipln | (prefix, config, init_dict, network, input_tensor, skip, bias = None) |

Add the skip layer (residual connection followed by layer normalization)
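A skip layer norm adds the residual input (and an optional bias) to the layer output, then applies layer normalization scaled by gamma and shifted by beta. The sketch below computes this for one hidden vector in plain Python. The `eps` default of 1e-12 is an assumption borrowed from common BERT configurations, not a value stated on this page.

```python
import math


def skip_layernorm(x, skip, gamma, beta, bias=None, eps=1e-12):
    """Return LayerNorm(x + skip [+ bias]) * gamma + beta for one vector."""
    if bias is None:
        bias = [0.0] * len(x)
    # Residual sum.
    s = [a + b + c for a, b, c in zip(x, skip, bias)]
    # Normalize to zero mean and unit (population) variance.
    mean = sum(s) / len(s)
    var = sum((v - mean) ** 2 for v in s) / len(s)
    inv_std = 1.0 / math.sqrt(var + eps)
    # Per-element scale (gamma) and shift (beta).
    return [g * (v - mean) * inv_std + bt for v, g, bt in zip(s, gamma, beta)]
```

Fusing the addition and the normalization is what lets a single plugin replace several elementwise layers.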

| def builder.custom_fc | (config, network, input_tensor, out_dims, W) |

| def builder.transformer_layer_opt | (prefix, config, init_dict, network, input_tensor, imask) |

Add the transformer layer

| def builder.bert_model | (config, init_dict, network, input_tensor, input_mask) |

Create the BERT model
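Building the model amounts to chaining transformer layers, each reading its weights under its own prefix. A minimal sketch of that loop follows, assuming prefixes of the form `l<i>_`; `stack_layers` is a hypothetical helper, and `transformer_layer` stands in for `transformer_layer_opt` with the network, config, and mask arguments omitted.

```python
def stack_layers(input_tensor, num_layers, transformer_layer):
    """Chain num_layers transformer layers, prefixing each with "l<i>_".

    transformer_layer: any callable taking (prefix, tensor) and returning the
    layer's output, which becomes the next layer's input.
    """
    prev = input_tensor
    for layer in range(num_layers):
        prev = transformer_layer(f"l{layer}_", prev)
    return prev
```

Passing a recording stub as `transformer_layer` shows the prefixes in the order the weights would be consumed.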

| def builder.squad_output | (prefix, config, init_dict, network, input_tensor) |

Create the SQuAD output layer
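The SQuAD head projects each token's final hidden vector to two logits: one scoring the token as the answer start, one as the answer end. The sketch below shows that projection in plain Python. The layout of the weights (two rows of length `hidden_size`, row 0 for start and row 1 for end) is an assumption about what `SQD_W` and `SQD_B` hold, not something this page specifies.

```python
def squad_logits(hidden_states, weights, bias):
    """Project each token's hidden vector to (start_logit, end_logit).

    hidden_states: S vectors of length hidden_size.
    weights: 2 rows of length hidden_size (assumed: row 0 start, row 1 end).
    bias: 2 values, one per row.
    Returns two lists of S logits each.
    """
    start, end = [], []
    for h in hidden_states:
        start.append(sum(w * x for w, x in zip(weights[0], h)) + bias[0])
        end.append(sum(w * x for w, x in zip(weights[1], h)) + bias[1])
    return start, end
```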

| def builder.load_tf_weights | (inputbase, config) |

Load the weights from the TensorFlow checkpoint
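Loading TensorFlow weights involves flattening hierarchical variable names into the suffix-based keys listed under Variables. The sketch below shows one plausible mapping. It assumes TF names of the form `bert/encoder/layer_<i>/...` and takes the first number in the name as the layer index. `tf_to_trt_name` is a hypothetical helper, and reading the checkpoint itself is omitted.

```python
import re


def tf_to_trt_name(tf_name):
    """Map a TF BERT variable name to a flat builder-style key (sketch).

    Encoder variables become "l<layer>_<rest>"; all other variables simply
    have their "/" separators replaced by "_".
    """
    toks = tf_name.lower().split("/")
    if "encoder" in toks:
        layer = re.findall(r"\d+", tf_name)[0]  # assumed: first number = layer index
        # Drop "bert/encoder/layer_<i>" and keep the remaining path.
        return f"l{layer}_" + "_".join(toks[3:])
    return "_".join(toks)
```

Under these assumptions, `bert/encoder/layer_0/attention/self/query/kernel` maps to `l0_attention_self_query_kernel`, which is the `l0_attention_` prefix followed by `WQ`.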

| def builder.onnx_to_trt_name | (onnx_name) |

Convert variable names in the ONNX checkpoint to the TensorFlow naming convention that the builder expects
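The sketch below illustrates the kind of renaming this implies for encoder-layer parameters only. Dotted ONNX/PyTorch-style names lose their `bert.encoder.layer` prefix, LayerNorm `bias`/`weight` become `beta`/`gamma`, and dense or Q/K/V `weight` becomes `kernel`. `onnx_to_trt_name_sketch` is a hypothetical, simplified helper. It deliberately ignores embeddings, the SQuAD head, and any quantization-related entries, and the input naming pattern is an assumption.

```python
QKV_STRINGS = {"query", "key", "value"}


def onnx_to_trt_name_sketch(onnx_name):
    """Simplified mapping of encoder-layer ONNX names to TF-style keys."""
    toks = [t.strip("_") for t in onnx_name.lower().split(".")]
    if toks[:2] != ["bert", "encoder"]:
        raise ValueError(f"not handled by this sketch: {onnx_name}")
    if toks[-2] == "layernorm":
        # TF convention: LayerNorm bias -> beta, weight -> gamma.
        toks[-1] = "beta" if toks[-1] == "bias" else "gamma"
    elif (toks[-2] == "dense" or toks[-2] in QKV_STRINGS) and toks[-1] == "weight":
        # TF calls dense and Q/K/V weight matrices "kernel".
        toks[-1] = "kernel"
    # Drop "bert.encoder.layer" and turn the layer index into "l<i>".
    toks = toks[3:]
    toks[0] = f"l{int(toks[0])}"
    return "_".join(toks)
```

The outputs line up with the prefix-plus-suffix keys built from the Variables constants, for example `l11_` followed by `AOUT_LN_GAMMA`.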

| def builder.load_onnx_weights_and_quant | (path, config) |

Load the weights from the ONNX checkpoint

| def builder.emb_layernorm | (builder, network, config, weights_dict, builder_config, sequence_lengths, batch_sizes) |

| def builder.build_engine | (batch_sizes, workspace_size, sequence_lengths, config, weights_dict, squad_json, vocab_file, calibrationCacheFile, calib_num) |

| def builder.generate_calibration_cache | (sequence_lengths, workspace_size, config, weights_dict, squad_json, vocab_file, calibrationCacheFile, calib_num) |

The BERT demo needs a separate engine-building path to generate the calibration cache. The SkipLayerNorm (SLN) and multi-head attention (MHA) plugins must be configured in FP32 mode while the calibration cache is generated, but in INT8 mode when the actual engine is built. The cache is generated by running a subset of the training data through the network, and it can be reused across different configurations.
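The two-pass flow described above can be sketched as follows. Calibration runs only when no cache exists yet, and the final INT8 build always reads scales from the cache. `build_int8_engine`, `generate_cache`, and `build_final` are hypothetical names. The callbacks stand in for the FP32-plugin calibration pass and the INT8-plugin engine build; they are not the TensorRT API.

```python
import os


def build_int8_engine(cache_path, generate_cache, build_final):
    """Two-pass INT8 build (sketch).

    generate_cache(cache_path): build with the SLN/MHA plugins in FP32, run
        calibration data through the network, and write scales to cache_path.
    build_final(cache_path): build the real engine with the plugins in INT8,
        reading the scales from cache_path.
    """
    if not os.path.exists(cache_path):
        generate_cache(cache_path)  # first pass: only when no cache exists
    return build_final(cache_path)  # second pass: reuses the cached scales
```

Keying the decision on the cache file's existence is what allows one calibration run to be reused by later builds.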

| def builder.main | () |

| builder.TRT_LOGGER = trt.Logger(trt.Logger.INFO) |

| builder.handle = ctypes.CDLL("libnvinfer_plugin.so", mode=ctypes.RTLD_GLOBAL) |

| builder.plg_registry = trt.get_plugin_registry() |

| builder.emln_plg_creator = plg_registry.get_plugin_creator("CustomEmbLayerNormPluginDynamic", "1", "") |

| builder.qkv2_plg_creator = plg_registry.get_plugin_creator("CustomQKVToContextPluginDynamic", "1", "") |

| builder.skln_plg_creator = plg_registry.get_plugin_creator("CustomSkipLayerNormPluginDynamic", "1", "") |

| builder.fc_plg_creator = plg_registry.get_plugin_creator("CustomFCPluginDynamic", "1", "") |

| string builder.WQ = "self_query_kernel" |

| string builder.BQ = "self_query_bias" |

| string builder.WK = "self_key_kernel" |

| string builder.BK = "self_key_bias" |

| string builder.WV = "self_value_kernel" |

| string builder.BV = "self_value_bias" |

| string builder.WQKV = "self_qkv_kernel" |

| string builder.BQKV = "self_qkv_bias" |

| string builder.W_AOUT = "attention_output_dense_kernel" |

| string builder.B_AOUT = "attention_output_dense_bias" |

| string builder.AOUT_LN_BETA = "attention_output_layernorm_beta" |

| string builder.AOUT_LN_GAMMA = "attention_output_layernorm_gamma" |

| string builder.W_MID = "intermediate_dense_kernel" |

| string builder.B_MID = "intermediate_dense_bias" |

| string builder.W_LOUT = "output_dense_kernel" |

| string builder.B_LOUT = "output_dense_bias" |

| string builder.LOUT_LN_BETA = "output_layernorm_beta" |

| string builder.LOUT_LN_GAMMA = "output_layernorm_gamma" |

| string builder.SQD_W = "squad_output_weights" |

| string builder.SQD_B = "squad_output_bias" |