Classes | |

| struct | AllOptions |

| struct | BaseModelOptions |

| struct | Binding |

| class | Bindings |

| struct | BuildOptions |

| struct | DeviceAllocator |

| struct | DeviceDeallocator |

| struct | HostAllocator |

| struct | HostDeallocator |

| struct | InferenceEnvironment |

| struct | InferenceOptions |

| struct | InferenceTime |

| Measurement times in milliseconds. More... | |

| struct | InferenceTrace |

| Measurement points in milliseconds. More... | |

| struct | LayerProfile |

| Layer profile information. More... | |

| class | Logger |

| Class which manages logging of TensorRT tools and samples. More... | |

| class | LogStreamConsumer |

| Convenience object used to facilitate use of C++ stream syntax when logging messages. Order of base classes is LogStreamConsumerBase and then std::ostream. This is because the LogStreamConsumerBase class is used to initialize the LogStreamConsumerBuffer member field in LogStreamConsumer and then the address of the buffer is passed to std::ostream. This is necessary to prevent the address of an uninitialized buffer from being passed to std::ostream. Please do not change the order of the parent classes. More... | |

| class | LogStreamConsumerBase |

| Convenience object used to initialize LogStreamConsumerBuffer before std::ostream in LogStreamConsumer. More... | |

| class | LogStreamConsumerBuffer |

| class | MirroredBuffer |

| Coupled host and device buffers. More... | |

| struct | ModelOptions |

| struct | Options |

| struct | Parser |

| class | Profiler |

| Collect per-layer profile information, assuming times are reported in the same order. More... | |

| struct | ReportingOptions |

| struct | SystemOptions |

| class | TrtCudaBuffer |

| Managed buffer for host and device. More... | |

| class | TrtCudaEvent |

| Managed CUDA event. More... | |

| class | TrtCudaGraph |

| Managed CUDA graph. More... | |

| class | TrtCudaStream |

| Managed CUDA stream. More... | |

| struct | TrtDestroyer |

| struct | UffInput |
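The note on LogStreamConsumer above describes a base-class ordering constraint: the buffer must be constructed before its address is handed to std::ostream. The following is a minimal sketch of that pattern, not the actual TensorRT classes. `PrefixBuffer`, `ConsumerBase`, and `Consumer` are hypothetical stand-ins, and the prefixing behavior is an assumption made only to give the buffer something observable to do.

```cpp
#include <ostream>
#include <sstream>
#include <string>
#include <utility>

// Hypothetical stand-in for LogStreamConsumerBuffer: collects text and
// forwards it, with a prefix, to a sink stream whenever it is flushed.
class PrefixBuffer : public std::stringbuf
{
public:
    PrefixBuffer(std::ostream& sink, std::string prefix)
        : mSink(sink)
        , mPrefix(std::move(prefix))
    {
    }

    // Called on flush (for example by std::endl).
    int sync() override
    {
        mSink << mPrefix << str();
        str(std::string()); // reset the collected text
        mSink.flush();
        return 0;
    }

private:
    std::ostream& mSink;
    std::string mPrefix;
};

// Hypothetical stand-in for LogStreamConsumerBase: owns the buffer so that
// it is fully constructed before std::ostream is initialized.
class ConsumerBase
{
protected:
    ConsumerBase(std::ostream& sink, const std::string& prefix)
        : mBuffer(sink, prefix)
    {
    }

    PrefixBuffer mBuffer;
};

// Base classes are constructed in declaration order: ConsumerBase first,
// then std::ostream, which receives the address of an already-built buffer.
// Swapping the two bases would pass a pointer to an unconstructed buffer.
class Consumer : protected ConsumerBase, public std::ostream
{
public:
    Consumer(std::ostream& sink, const std::string& prefix)
        : ConsumerBase(sink, prefix)
        , std::ostream(&mBuffer)
    {
    }
};
```

With this layout, streaming into a `Consumer` and ending with `std::endl` flushes one prefixed message to the sink.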

Typedefs | |

| using | Severity = nvinfer1::ILogger::Severity |

| using | TrtDeviceBuffer = TrtCudaBuffer< DeviceAllocator, DeviceDeallocator > |

| using | TrtHostBuffer = TrtCudaBuffer< HostAllocator, HostDeallocator > |

| using | Arguments = std::unordered_multimap< std::string, std::string > |

| using | IOFormat = std::pair< nvinfer1::DataType, nvinfer1::TensorFormats > |

| using | ShapeRange = std::array< std::vector< int >, nvinfer1::EnumMax< nvinfer1::OptProfileSelector >()> |

| template<typename T > | |

| using | TrtUniquePtr = std::unique_ptr< T, TrtDestroyer< T > > |

Enumerations | |

| enum | ModelFormat { ModelFormat::kANY, ModelFormat::kCAFFE, ModelFormat::kONNX, ModelFormat::kUFF } |

Functions | |

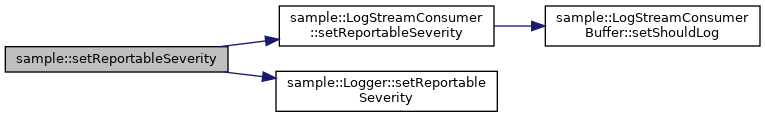

| void | setReportableSeverity (Logger::Severity severity) |

| void | cudaCheck (cudaError_t ret, std::ostream &err=std::cerr) |

| void | setCudaDevice (int device, std::ostream &os) |

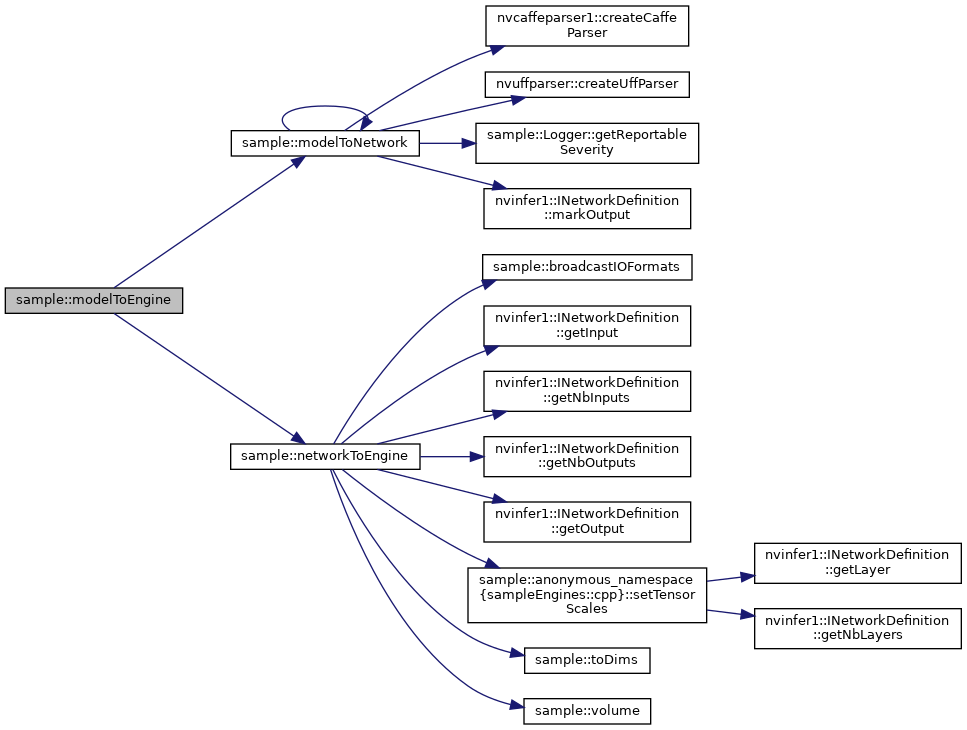

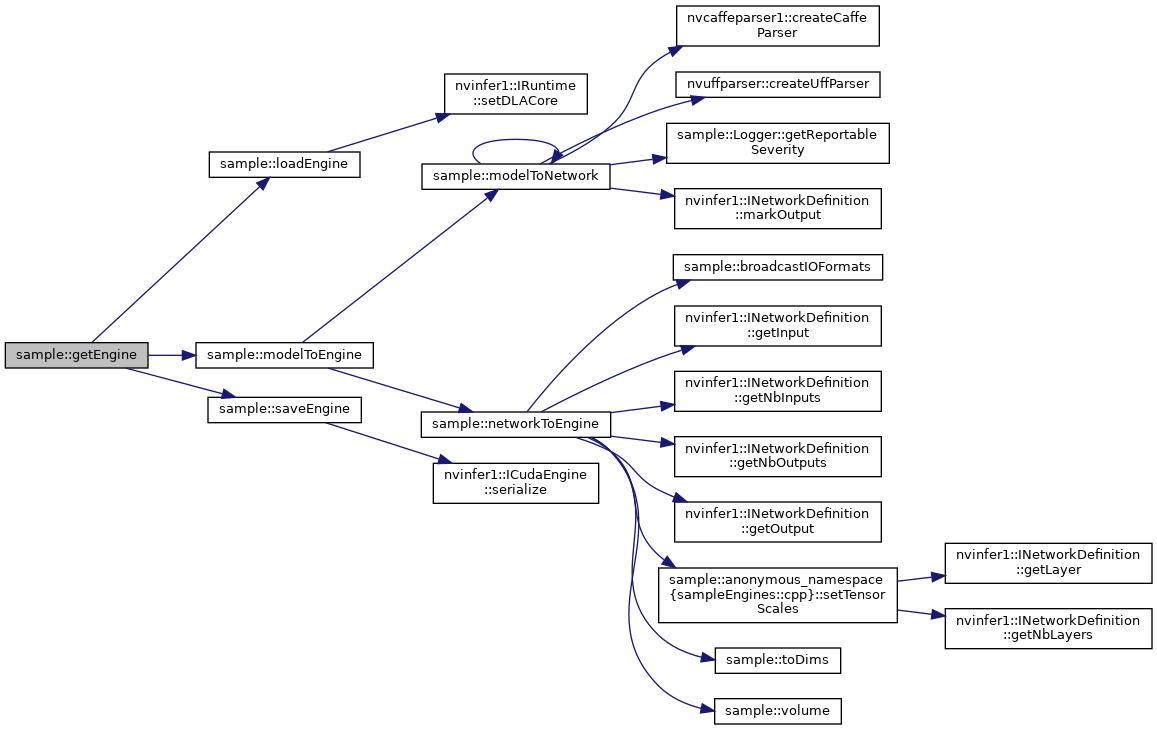

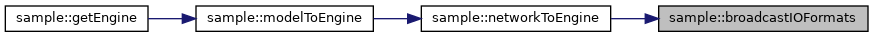

| Parser | modelToNetwork (const ModelOptions &model, nvinfer1::INetworkDefinition &network, std::ostream &err) |

| Generate a network definition for a given model. More... | |

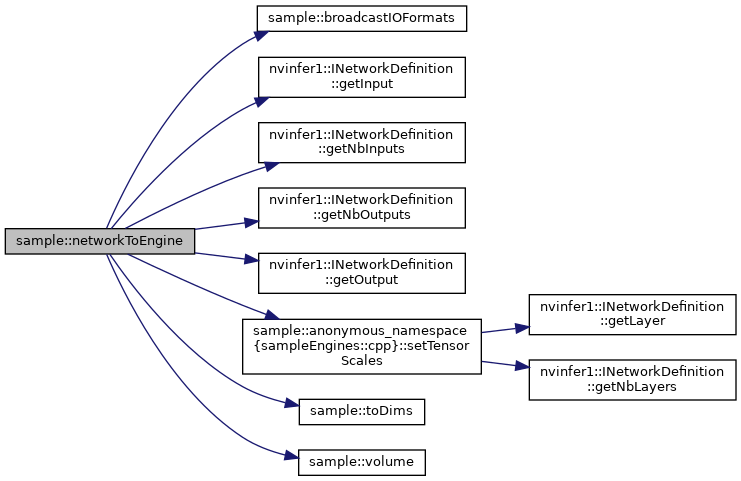

| ICudaEngine * | networkToEngine (const BuildOptions &build, const SystemOptions &sys, nvinfer1::IBuilder &builder, nvinfer1::INetworkDefinition &network, std::ostream &err) |

| Create an engine for a network definition. More... | |

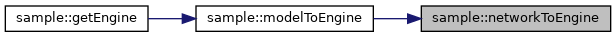

| ICudaEngine * | modelToEngine (const ModelOptions &model, const BuildOptions &build, const SystemOptions &sys, std::ostream &err) |

| Create an engine for a given model. More... | |

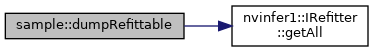

| void | dumpRefittable (nvinfer1::ICudaEngine &engine) |

| Log refittable layers and weights of a refittable engine. More... | |

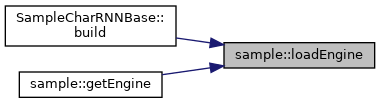

| ICudaEngine * | loadEngine (const std::string &engine, int DLACore, std::ostream &err) |

| Load a serialized engine. More... | |

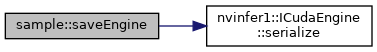

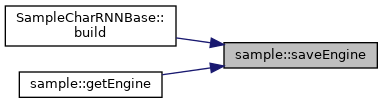

| bool | saveEngine (const nvinfer1::ICudaEngine &engine, const std::string &fileName, std::ostream &err) |

| Save an engine into a file. More... | |

| TrtUniquePtr< nvinfer1::ICudaEngine > | getEngine (const ModelOptions &model, const BuildOptions &build, const SystemOptions &sys, std::ostream &err) |

| Create an engine from model or serialized file, and optionally save engine. More... | |

| bool | setUpInference (InferenceEnvironment &iEnv, const InferenceOptions &inference) |

| Set up contexts and bindings for inference. More... | |

| void | runInference (const InferenceOptions &inference, InferenceEnvironment &iEnv, int device, std::vector< InferenceTrace > &trace) |

| Run inference and collect timing. More... | |

| Arguments | argsToArgumentsMap (int argc, char *argv[]) |

| bool | parseHelp (Arguments &arguments) |

| std::ostream & | operator<< (std::ostream &os, const BaseModelOptions &options) |

| std::ostream & | operator<< (std::ostream &os, const UffInput &input) |

| std::ostream & | operator<< (std::ostream &os, const ModelOptions &options) |

| std::ostream & | operator<< (std::ostream &os, const IOFormat &format) |

| std::ostream & | operator<< (std::ostream &os, const ShapeRange &dims) |

| std::ostream & | operator<< (std::ostream &os, const BuildOptions &options) |

| std::ostream & | operator<< (std::ostream &os, const SystemOptions &options) |

| std::ostream & | operator<< (std::ostream &os, const InferenceOptions &options) |

| std::ostream & | operator<< (std::ostream &os, const ReportingOptions &options) |

| std::ostream & | operator<< (std::ostream &os, const AllOptions &options) |

| void | helpHelp (std::ostream &os) |

| void | printProlog (int warmups, int timings, float warmupMs, float walltime, std::ostream &os) |

| Print benchmarking time and number of traces collected. More... | |

| void | printTiming (const std::vector< InferenceTime > &timings, int runsPerAvg, std::ostream &os) |

| Print a timing trace. More... | |

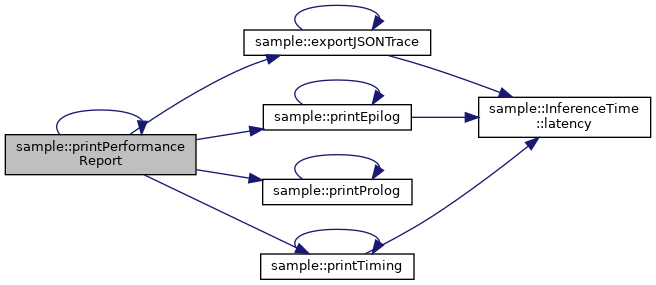

| void | printEpilog (std::vector< InferenceTime > timings, float walltimeMs, float percentile, int queries, std::ostream &os) |

| void | printPerformanceReport (const std::vector< InferenceTrace > &trace, const ReportingOptions &reporting, float warmupMs, int queries, std::ostream &os) |

| Print and summarize a timing trace. More... | |

| void | exportJSONTrace (const std::vector< InferenceTrace > &trace, const std::string &fileName) |

| Printed format: [ value, ... ], where value ::= { "start enq" : time, "end enq" : time, "start in" : time, "end in" : time, "start compute" : time, "end compute" : time, "start out" : time, "in" : time, "compute" : time, "out" : time, "latency" : time, "end to end" : time }. More... | |

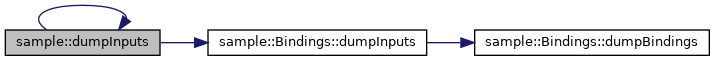

| void | dumpInputs (const nvinfer1::IExecutionContext &context, const Bindings &bindings, std::ostream &os) |

| Print input tensors to stream. More... | |

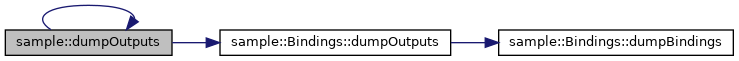

| void | dumpOutputs (const nvinfer1::IExecutionContext &context, const Bindings &bindings, std::ostream &os) |

| Print output tensors to stream. More... | |

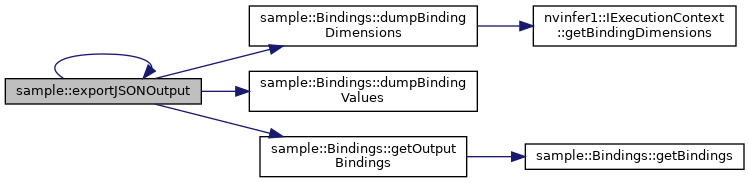

| void | exportJSONOutput (const nvinfer1::IExecutionContext &context, const Bindings &bindings, const std::string &fileName) |

| Export output tensors to JSON file. More... | |

| InferenceTime | operator+ (const InferenceTime &a, const InferenceTime &b) |

| InferenceTime | operator+= (InferenceTime &a, const InferenceTime &b) |

| void | printEpilog (std::vector< InferenceTime > timings, float percentile, int queries, std::ostream &os) |

| Print the performance summary of a trace. More... | |

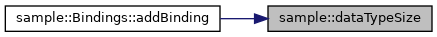

| int | dataTypeSize (nvinfer1::DataType dataType) |

| template<typename T > | |

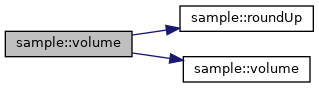

| T | roundUp (T m, T n) |

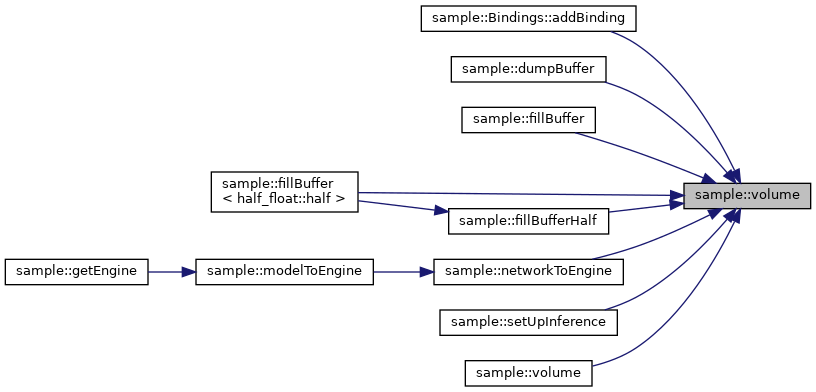

| int | volume (const nvinfer1::Dims &d) |

| int | volume (const nvinfer1::Dims &dims, const nvinfer1::Dims &strides, int vecDim, int comps, int batch) |

| int | volume (nvinfer1::Dims dims, int vecDim, int comps, int batch) |

| std::ostream & | operator<< (std::ostream &os, const nvinfer1::Dims &dims) |

| std::ostream & | operator<< (std::ostream &os, const std::vector< int > &vec) |

| std::ostream & | operator<< (std::ostream &os, const nvinfer1::WeightsRole role) |

| nvinfer1::Dims | toDims (const std::vector< int > &vec) |

| template<typename T > | |

| void | fillBuffer (void *buffer, int volume, T min, T max) |

| template<typename H > | |

| void | fillBufferHalf (void *buffer, int volume, H min, H max) |

| template<> | |

| void | fillBuffer< half_float::half > (void *buffer, int volume, half_float::half min, half_float::half max) |

| template<typename T > | |

| void | dumpBuffer (const void *buffer, int volume, const std::string &separator, std::ostream &os) |

| bool | broadcastIOFormats (const std::vector< IOFormat > &formats, size_t nbBindings, bool isInput=true) |

Variables | |

| Logger | gLogger {Logger::Severity::kINFO} |

| LogStreamConsumer | gLogVerbose {LOG_VERBOSE(gLogger)} |

| LogStreamConsumer | gLogInfo {LOG_INFO(gLogger)} |

| LogStreamConsumer | gLogWarning {LOG_WARN(gLogger)} |

| LogStreamConsumer | gLogError {LOG_ERROR(gLogger)} |

| LogStreamConsumer | gLogFatal {LOG_FATAL(gLogger)} |

| constexpr int | defaultMaxBatch {1} |

| constexpr int | defaultWorkspace {16} |

| constexpr int | defaultMinTiming {1} |

| constexpr int | defaultAvgTiming {8} |

| constexpr int | defaultDevice {0} |

| constexpr int | defaultBatch {1} |

| constexpr int | defaultStreams {1} |

| constexpr int | defaultIterations {10} |

| constexpr int | defaultWarmUp {200} |

| constexpr int | defaultDuration {3} |

| constexpr int | defaultSleep {0} |

| constexpr int | defaultAvgRuns {10} |

| constexpr float | defaultPercentile {99} |

| using sample::Severity = nvinfer1::ILogger::Severity |

| using sample::TrtDeviceBuffer = TrtCudaBuffer<DeviceAllocator, DeviceDeallocator> |

| using sample::TrtHostBuffer = TrtCudaBuffer<HostAllocator, HostDeallocator> |

| using sample::Arguments = std::unordered_multimap<std::string, std::string> |

| using sample::IOFormat = std::pair<nvinfer1::DataType, nvinfer1::TensorFormats> |

| using sample::ShapeRange = std::array<std::vector<int>, nvinfer1::EnumMax<nvinfer1::OptProfileSelector>()> |

| template<typename T> using sample::TrtUniquePtr = std::unique_ptr<T, TrtDestroyer<T>> |
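The TrtUniquePtr alias pairs std::unique_ptr with a custom deleter type. The sketch below shows how such an alias behaves, under the assumption that the deleter forwards to a `destroy()` method on the managed object; the body of TrtDestroyer is not shown on this page, so that is a guess. `FakeEngine`, `Destroyer`, and `UniquePtr` are hypothetical names used only for illustration.

```cpp
#include <memory>

// Hypothetical stand-in for an object that is released through destroy()
// instead of a plain delete expression.
struct FakeEngine
{
    explicit FakeEngine(int& destroyed)
        : mDestroyed(destroyed)
    {
    }

    void destroy()
    {
        ++mDestroyed; // record the call so it can be observed
        delete this;
    }

private:
    int& mDestroyed;
};

// Assumed shape of the deleter: call destroy() on the managed object.
template <typename T>
struct Destroyer
{
    void operator()(T* t) const
    {
        t->destroy();
    }
};

// Same form as the TrtUniquePtr alias listed above.
template <typename T>
using UniquePtr = std::unique_ptr<T, Destroyer<T>>;
```

When a `UniquePtr<FakeEngine>` goes out of scope, `destroy()` runs exactly once, so callers never release the object by hand.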


| void sample::setReportableSeverity | ( | Logger::Severity | severity | ) |


| Parser sample::modelToNetwork | ( | const ModelOptions & | model, |

| nvinfer1::INetworkDefinition & | network, | ||

| std::ostream & | err | ||

| ) |

Generate a network definition for a given model.

| nvinfer1::ICudaEngine * sample::networkToEngine | ( | const BuildOptions & | build, |

| const SystemOptions & | sys, | ||

| nvinfer1::IBuilder & | builder, | ||

| nvinfer1::INetworkDefinition & | network, | ||

| std::ostream & | err | ||

| ) |

Create an engine for a network definition.

| nvinfer1::ICudaEngine * sample::modelToEngine | ( | const ModelOptions & | model, |

| const BuildOptions & | build, | ||

| const SystemOptions & | sys, | ||

| std::ostream & | err | ||

| ) |

Create an engine for a given model.

| void sample::dumpRefittable | ( | nvinfer1::ICudaEngine & | engine | ) |

Log refittable layers and weights of a refittable engine.

| nvinfer1::ICudaEngine * sample::loadEngine | ( | const std::string & | engine, |

| int | DLACore, | ||

| std::ostream & | err | ||

| ) |

Load a serialized engine.

| bool sample::saveEngine | ( | const nvinfer1::ICudaEngine & | engine, |

| const std::string & | fileName, | ||

| std::ostream & | err | ||

| ) |

Save an engine into a file.

| TrtUniquePtr< nvinfer1::ICudaEngine > sample::getEngine | ( | const ModelOptions & | model, |

| const BuildOptions & | build, | ||

| const SystemOptions & | sys, | ||

| std::ostream & | err | ||

| ) |

Create an engine from model or serialized file, and optionally save engine.

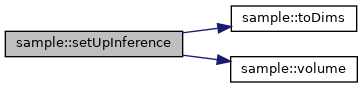

| bool sample::setUpInference | ( | InferenceEnvironment & | iEnv, |

| const InferenceOptions & | inference | ||

| ) |

Set up contexts and bindings for inference.

| void sample::runInference | ( | const InferenceOptions & | inference, |

| InferenceEnvironment & | iEnv, | ||

| int | device, | ||

| std::vector< InferenceTrace > & | trace | ||

| ) |

Run inference and collect timing.

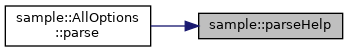

| bool sample::parseHelp | ( | Arguments & | arguments | ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const BaseModelOptions & | options | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const UffInput & | input | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const ModelOptions & | options | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const IOFormat & | format | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const ShapeRange & | dims | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const BuildOptions & | options | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const SystemOptions & | options | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const InferenceOptions & | options | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const ReportingOptions & | options | ||

| ) |

| std::ostream & sample::operator<< | ( | std::ostream & | os, |

| const AllOptions & | options | ||

| ) |

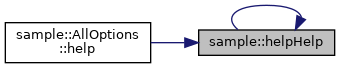

| void sample::helpHelp | ( | std::ostream & | os | ) |

| void sample::printProlog | ( | int | warmups, |

| int | timings, | ||

| float | warmupMs, | ||

| float | benchTimeMs, | ||

| std::ostream & | os | ||

| ) |

Print benchmarking time and number of traces collected.

| void sample::printTiming | ( | const std::vector< InferenceTime > & | timings, |

| int | runsPerAvg, | ||

| std::ostream & | os | ||

| ) |

Print a timing trace.

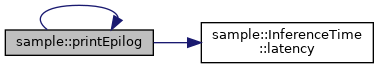

| void sample::printEpilog | ( | std::vector< InferenceTime > | timings, |

| float | walltimeMs, | ||

| float | percentile, | ||

| int | queries, | ||

| std::ostream & | os | ||

| ) |

| void sample::printPerformanceReport | ( | const std::vector< InferenceTrace > & | trace, |

| const ReportingOptions & | reporting, | ||

| float | warmupMs, | ||

| int | queries, | ||

| std::ostream & | os | ||

| ) |

Print and summarize a timing trace.

| void sample::exportJSONTrace | ( | const std::vector< InferenceTrace > & | trace, |

| const std::string & | fileName | ||

| ) |

Printed format: [ value, ... ], where value ::= { "start enq" : time, "end enq" : time, "start in" : time, "end in" : time, "start compute" : time, "end compute" : time, "start out" : time, "in" : time, "compute" : time, "out" : time, "latency" : time, "end to end" : time }.

Export a timing trace to JSON file.
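As a rough illustration of the printed format, the sketch below writes a JSON array with one object per trace. It is not the implementation of exportJSONTrace. `TracePoint` and `writeJSONTrace` are hypothetical names, the real InferenceTrace members are not shown on this page, and only the seven timestamp keys are emitted because the definitions of the derived values ("in", "compute", "out", "latency", "end to end") are not given here.

```cpp
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical trace record holding the seven timestamps (in milliseconds)
// named in the printed format.
struct TracePoint
{
    float startEnq;
    float endEnq;
    float startIn;
    float endIn;
    float startCompute;
    float endCompute;
    float startOut;
};

// Write the traces as a JSON array, one object per trace.
void writeJSONTrace(const std::vector<TracePoint>& trace, std::ostream& os)
{
    os << "[";
    const char* sep = "\n  ";
    for (const auto& t : trace)
    {
        os << sep << "{ \"start enq\" : " << t.startEnq
           << ", \"end enq\" : " << t.endEnq
           << ", \"start in\" : " << t.startIn
           << ", \"end in\" : " << t.endIn
           << ", \"start compute\" : " << t.startCompute
           << ", \"end compute\" : " << t.endCompute
           << ", \"start out\" : " << t.startOut << " }";
        sep = ",\n  "; // every object after the first is preceded by a comma
    }
    os << "\n]\n";
}
```

Writing to a std::ostream rather than directly to a file name keeps the sketch easy to check; a caller could pass a std::ofstream opened on the target file.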

| void sample::dumpInputs | ( | const nvinfer1::IExecutionContext & | context, |

| const Bindings & | bindings, | ||

| std::ostream & | os | ||

| ) |

Print input tensors to stream.

| void sample::dumpOutputs | ( | const nvinfer1::IExecutionContext & | context, |

| const Bindings & | bindings, | ||

| std::ostream & | os | ||

| ) |

Print output tensors to stream.

| void sample::exportJSONOutput | ( | const nvinfer1::IExecutionContext & | context, |

| const Bindings & | bindings, | ||

| const std::string & | fileName | ||

| ) |

Export output tensors to JSON file.


| void sample::printEpilog | ( | std::vector< InferenceTime > | timings, |

| float | percentile, | ||

| int | queries, | ||

| std::ostream & | os | ||

| ) |

Print the performance summary of a trace.


| Logger sample::gLogger {Logger::Severity::kINFO} |

| LogStreamConsumer sample::gLogVerbose {LOG_VERBOSE(gLogger)} |

| LogStreamConsumer sample::gLogInfo {LOG_INFO(gLogger)} |

| LogStreamConsumer sample::gLogWarning {LOG_WARN(gLogger)} |

| LogStreamConsumer sample::gLogError {LOG_ERROR(gLogger)} |

| LogStreamConsumer sample::gLogFatal {LOG_FATAL(gLogger)} |
