Classes | |

| struct | Enqueue |

| class | EnqueueExplicit |

| Functor to enqueue inference with explicit batch. More... | |

| class | EnqueueGraph |

| Functor to enqueue inference from CUDA Graph. More... | |

| class | EnqueueImplicit |

| Functor to enqueue inference with implicit batch. More... | |

| class | Iteration |

| Inference iteration and streams management. More... | |

| struct | SyncStruct |

| Threads synchronization structure. More... | |

Typedefs | |

| using | TimePoint = std::chrono::time_point< std::chrono::high_resolution_clock > |

| using | EnqueueFunction = std::function< void(TrtCudaStream &)> |

| using | MultiStream = std::array< TrtCudaStream, static_cast< int >(StreamType::kNUM)> |

| using | MultiEvent = std::array< std::unique_ptr< TrtCudaEvent >, static_cast< int >(EventType::kNUM)> |

| using | EnqueueTimes = std::array< TimePoint, 2 > |

| using | IterationStreams = std::vector< std::unique_ptr< Iteration > > |
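As a rough illustration of how `TimePoint`, `EnqueueFunction`, and `EnqueueTimes` could fit together, the sketch below times a single enqueue call. `FakeStream`, `timedEnqueue`, and `elapsedMs` are hypothetical names introduced only for this example, and `FakeStream` stands in for `TrtCudaStream` so the snippet compiles without CUDA or TensorRT. Treating the two `EnqueueTimes` slots as the start and end of the enqueue call is an assumption, not something this page states.

```cpp
#include <array>
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical stand-in for TrtCudaStream (assumption: the real class wraps a CUDA stream).
struct FakeStream
{
    int launches{0};
};

using TimePoint = std::chrono::time_point<std::chrono::high_resolution_clock>;
using EnqueueFunction = std::function<void(FakeStream&)>; // the listing uses TrtCudaStream& here
using EnqueueTimes = std::array<TimePoint, 2>;

// Hypothetical helper: record a host timestamp before and after the enqueue call.
// Assumption: slot 0 holds the start time and slot 1 the end time.
inline EnqueueTimes timedEnqueue(const EnqueueFunction& enqueue, FakeStream& stream)
{
    assert(enqueue && "enqueue functor must not be empty");
    EnqueueTimes times;
    times[0] = std::chrono::high_resolution_clock::now();
    enqueue(stream);
    times[1] = std::chrono::high_resolution_clock::now();
    return times;
}

// Hypothetical helper: convert the recorded pair to elapsed milliseconds.
inline float elapsedMs(const EnqueueTimes& times)
{
    return std::chrono::duration<float, std::milli>(times[1] - times[0]).count();
}
```

Any callable that accepts a stream reference can be stored in `EnqueueFunction`, which is presumably why the sample can switch between `EnqueueExplicit`, `EnqueueImplicit`, and `EnqueueGraph` behind a single type.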

Enumerations | |

| enum | StreamType : int { StreamType::kINPUT = 0, StreamType::kCOMPUTE = 1, StreamType::kOUTPUT = 2, StreamType::kNUM = 3 } |

| enum | EventType : int { EventType::kINPUT_S = 0, EventType::kINPUT_E = 1, EventType::kCOMPUTE_S = 2, EventType::kCOMPUTE_E = 3, EventType::kOUTPUT_S = 4, EventType::kOUTPUT_E = 5, EventType::kNUM = 6 } |
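The `kNUM` enumerators double as array sizes for `MultiStream` and `MultiEvent`. The sketch below reproduces that pattern under some assumptions: `FakeStream`, `FakeEvent`, `getStream`, `getEvent`, and `makeEvents` are hypothetical names used only for illustration, with the two structs standing in for `TrtCudaStream` and `TrtCudaEvent` so the snippet builds without CUDA. The enumerator names and values are copied from the table above.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <memory>

// Enumerators and values as listed above; kNUM is the element count, not a real stream or event.
enum class StreamType : int { kINPUT = 0, kCOMPUTE = 1, kOUTPUT = 2, kNUM = 3 };
enum class EventType : int
{
    kINPUT_S = 0, kINPUT_E = 1, kCOMPUTE_S = 2, kCOMPUTE_E = 3, kOUTPUT_S = 4, kOUTPUT_E = 5, kNUM = 6
};

// Hypothetical stand-ins for TrtCudaStream and TrtCudaEvent.
struct FakeStream { int id{0}; };
struct FakeEvent { bool recorded{false}; };

using MultiStream = std::array<FakeStream, static_cast<int>(StreamType::kNUM)>;
using MultiEvent = std::array<std::unique_ptr<FakeEvent>, static_cast<int>(EventType::kNUM)>;

// Hypothetical accessor: scoped enums do not convert implicitly, so cast before indexing.
inline FakeStream& getStream(MultiStream& streams, StreamType t)
{
    return streams[static_cast<std::size_t>(t)];
}

// Hypothetical accessor for events; the array holds owning pointers, so dereference.
inline FakeEvent& getEvent(MultiEvent& events, EventType t)
{
    auto& ptr = events[static_cast<std::size_t>(t)];
    assert(ptr && "event slot must be allocated before use");
    return *ptr;
}

// Hypothetical factory: allocate one event per EventType slot.
inline MultiEvent makeEvents()
{
    MultiEvent events;
    for (auto& e : events)
    {
        e = std::make_unique<FakeEvent>();
    }
    return events;
}
```

Each stream type gets a start (`_S`) and end (`_E`) event slot, so `EventType::kNUM` is twice `StreamType::kNUM`.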

Functions | |

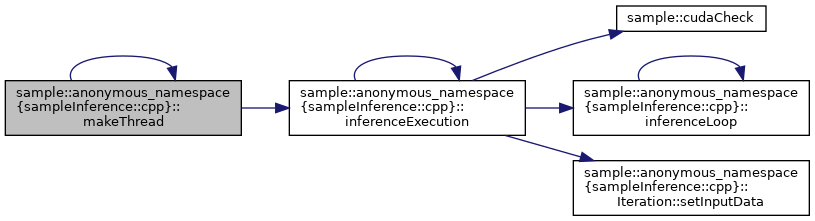

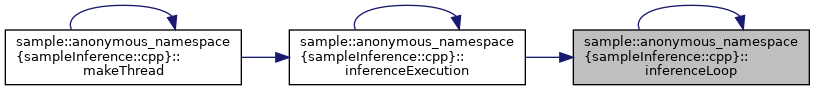

| void | inferenceLoop (IterationStreams &iStreams, const TimePoint &cpuStart, const TrtCudaEvent &gpuStart, int iterations, float maxDurationMs, float warmupMs, std::vector< InferenceTrace > &trace, bool skipTransfers) |

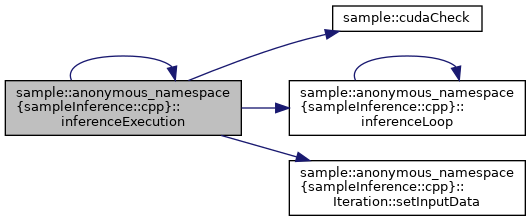

| void | inferenceExecution (const InferenceOptions &inference, InferenceEnvironment &iEnv, SyncStruct &sync, int offset, int streams, int device, std::vector< InferenceTrace > &trace) |

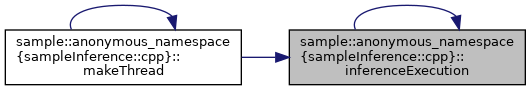

| std::thread | makeThread (const InferenceOptions &inference, InferenceEnvironment &iEnv, SyncStruct &sync, int thread, int streamsPerThread, int device, std::vector< InferenceTrace > &trace) |

| using sample::anonymous_namespace{sampleInference.cpp}::TimePoint = std::chrono::time_point<std::chrono::high_resolution_clock> |

| using sample::anonymous_namespace{sampleInference.cpp}::EnqueueFunction = std::function<void(TrtCudaStream&)> |

| using sample::anonymous_namespace{sampleInference.cpp}::MultiStream = std::array<TrtCudaStream, static_cast<int>(StreamType::kNUM)> |

| using sample::anonymous_namespace{sampleInference.cpp}::MultiEvent = std::array<std::unique_ptr<TrtCudaEvent>, static_cast<int>(EventType::kNUM)> |

| using sample::anonymous_namespace{sampleInference.cpp}::EnqueueTimes = std::array<TimePoint, 2> |

| using sample::anonymous_namespace{sampleInference.cpp}::IterationStreams = std::vector<std::unique_ptr<Iteration>> |

| void sample::anonymous_namespace{sampleInference.cpp}::inferenceLoop (IterationStreams &iStreams, const TimePoint &cpuStart, const TrtCudaEvent &gpuStart, int iterations, float maxDurationMs, float warmupMs, std::vector< InferenceTrace > &trace, bool skipTransfers) |

| void sample::anonymous_namespace{sampleInference.cpp}::inferenceExecution (const InferenceOptions &inference, InferenceEnvironment &iEnv, SyncStruct &sync, int offset, int streams, int device, std::vector< InferenceTrace > &trace) |
