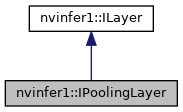

A Pooling layer in a network definition. More...

Public Member Functions | |

| virtual void | setPoolingType (PoolingType type)=0 |

| Set the type of pooling to be performed. More... | |

| virtual PoolingType | getPoolingType () const =0 |

| Get the type of pooling to be performed. More... | |

| __attribute__ ((deprecated)) virtual void setWindowSize(DimsHW windowSize)=0 | |

| Set the window size for pooling. More... | |

| __attribute__ ((deprecated)) virtual DimsHW getWindowSize() const =0 | |

| Get the window size for pooling. More... | |

| __attribute__ ((deprecated)) virtual void setStride(DimsHW stride)=0 | |

| Set the stride for pooling. More... | |

| __attribute__ ((deprecated)) virtual DimsHW getStride() const =0 | |

| Get the stride for pooling. More... | |

| __attribute__ ((deprecated)) virtual void setPadding(DimsHW padding)=0 | |

| Set the padding for pooling. More... | |

| __attribute__ ((deprecated)) virtual DimsHW getPadding() const =0 | |

| Get the padding for pooling. More... | |

| virtual void | setBlendFactor (float blendFactor)=0 |

| Set the blending factor for the max_average_blend mode: max_average_blendPool = (1-blendFactor)*maxPool + blendFactor*avgPool. blendFactor is a user value in [0,1] with a default value of 0.0. This value only applies to the kMAX_AVERAGE_BLEND mode. More... | |

| virtual float | getBlendFactor () const =0 |

| Get the blending factor for the max_average_blend mode: max_average_blendPool = (1-blendFactor)*maxPool + blendFactor*avgPool. blendFactor is a user value in [0,1] with a default value of 0.0. In modes other than kMAX_AVERAGE_BLEND, blendFactor is ignored. More... | |

| virtual void | setAverageCountExcludesPadding (bool exclusive)=0 |

| Set whether average pooling uses as a denominator the overlap area between the window and the unpadded input. More... | |

| virtual bool | getAverageCountExcludesPadding () const =0 |

| Get whether average pooling uses as a denominator the overlap area between the window and the unpadded input. More... | |

| virtual void | setPrePadding (Dims padding)=0 |

| Set the multi-dimension pre-padding for pooling. More... | |

| virtual Dims | getPrePadding () const =0 |

| Get the pre-padding. More... | |

| virtual void | setPostPadding (Dims padding)=0 |

| Set the multi-dimension post-padding for pooling. More... | |

| virtual Dims | getPostPadding () const =0 |

| Get the post-padding. More... | |

| virtual void | setPaddingMode (PaddingMode paddingMode)=0 |

| Set the padding mode. More... | |

| virtual PaddingMode | getPaddingMode () const =0 |

| Get the padding mode. More... | |

| virtual void | setWindowSizeNd (Dims windowSize)=0 |

| Set the multi-dimension window size for pooling. More... | |

| virtual Dims | getWindowSizeNd () const =0 |

| Get the multi-dimension window size for pooling. More... | |

| virtual void | setStrideNd (Dims stride)=0 |

| Set the multi-dimension stride for pooling. More... | |

| virtual Dims | getStrideNd () const =0 |

| Get the multi-dimension stride for pooling. More... | |

| virtual void | setPaddingNd (Dims padding)=0 |

| Set the multi-dimension padding for pooling. More... | |

| virtual Dims | getPaddingNd () const =0 |

| Get the multi-dimension padding for pooling. More... | |

| virtual LayerType | getType () const =0 |

| Return the type of a layer. More... | |

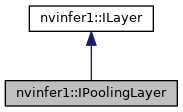

| virtual void | setName (const char *name)=0 |

| Set the name of a layer. More... | |

| virtual const char * | getName () const =0 |

| Return the name of a layer. More... | |

| virtual int32_t | getNbInputs () const =0 |

| Get the number of inputs of a layer. More... | |

| virtual ITensor * | getInput (int32_t index) const =0 |

| Get the layer input corresponding to the given index. More... | |

| virtual int32_t | getNbOutputs () const =0 |

| Get the number of outputs of a layer. More... | |

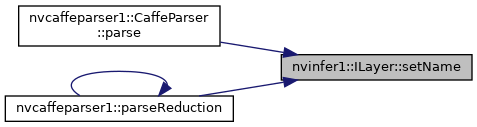

| virtual ITensor * | getOutput (int32_t index) const =0 |

| Get the layer output corresponding to the given index. More... | |

| virtual void | setInput (int32_t index, ITensor &tensor)=0 |

| Replace an input of this layer with a specific tensor. More... | |

| virtual void | setPrecision (DataType dataType)=0 |

| Set the computational precision of this layer. More... | |

| virtual DataType | getPrecision () const =0 |

| Get the computational precision of this layer. More... | |

| virtual bool | precisionIsSet () const =0 |

| Whether the computational precision has been set for this layer. More... | |

| virtual void | resetPrecision ()=0 |

| Reset the computational precision for this layer. More... | |

| virtual void | setOutputType (int32_t index, DataType dataType)=0 |

| Set the output type of this layer. More... | |

| virtual DataType | getOutputType (int32_t index) const =0 |

| Get the output type of this layer. More... | |

| virtual bool | outputTypeIsSet (int32_t index) const =0 |

| Whether the output type has been set for this layer. More... | |

| virtual void | resetOutputType (int32_t index)=0 |

| Reset the output type for this layer. More... | |

Protected Member Functions | |

| virtual | ~IPoolingLayer () |

A Pooling layer in a network definition.

The layer applies a reduction operation within a window over the input.

|

inline protected virtual |

|

pure virtual |

Set the type of pooling to be performed.

DLA only supports kMAX and kAVERAGE pooling types.

|

pure virtual |

Get the type of pooling to be performed.

|

pure virtual |

Set the window size for pooling.

If executing this layer on DLA, both height and width of window size must be in the range [1,8].

|

pure virtual |

Get the window size for pooling.

|

pure virtual |

Set the stride for pooling.

Default: 1

If executing this layer on DLA, both height and width of stride must be in the range [1,16].

|

pure virtual |

Get the stride for pooling.

|

pure virtual |

Set the padding for pooling.

Default: 0

If executing this layer on DLA, both height and width of padding must be in the range [0,7].

|

pure virtual |

Get the padding for pooling.

Default: 0

|

pure virtual |

Set the blending factor for the max_average_blend mode:

max_average_blendPool = (1-blendFactor)*maxPool + blendFactor*avgPool

blendFactor is a user value in [0,1] with a default value of 0.0. This value only applies to the kMAX_AVERAGE_BLEND mode.

Since DLA does not support kMAX_AVERAGE_BLEND, blendFactor is ignored on the DLA.
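The blend formula above can be sketched for a single pooling window as follows. This is a standalone illustration, not TensorRT code: `blendPoolWindow` is a hypothetical helper name, and the window is assumed to be passed as a flat list of values.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical helper (not part of the TensorRT API): applies
// (1 - blendFactor) * maxPool + blendFactor * avgPool to one window of values.
float blendPoolWindow(const std::vector<float>& window, float blendFactor)
{
    assert(!window.empty());
    assert(blendFactor >= 0.0f && blendFactor <= 1.0f); // documented range [0,1]

    const float maxPool = *std::max_element(window.begin(), window.end());
    const float avgPool = std::accumulate(window.begin(), window.end(), 0.0f)
        / static_cast<float>(window.size());

    return (1.0f - blendFactor) * maxPool + blendFactor * avgPool;
}
```

With the default blendFactor of 0.0 the result equals plain max pooling, and with 1.0 it equals plain average pooling.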

|

pure virtual |

Get the blending factor for the max_average_blend mode:

max_average_blendPool = (1-blendFactor)*maxPool + blendFactor*avgPool

blendFactor is a user value in [0,1] with a default value of 0.0. In modes other than kMAX_AVERAGE_BLEND, blendFactor is ignored.

|

pure virtual |

Set whether average pooling uses as a denominator the overlap area between the window and the unpadded input.

If this is not set, the denominator is the overlap between the pooling window and the padded input.

Default: true

If executing this layer on the DLA, this setting is ignored, because the DLA only supports inclusive padding.
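To make the two denominators concrete, here is a minimal 1D sketch. It is an illustration under stated assumptions rather than TensorRT's implementation: `averagePool1D` and its parameters are hypothetical names, padding is symmetric, and padded positions contribute zero to the sum.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the TensorRT API): average of one 1D
// pooling window, with the denominator chosen as described above.
float averagePool1D(const std::vector<float>& input, int window, int stride,
                    int padding, int outIndex, bool countExcludesPadding)
{
    const int n = static_cast<int>(input.size());
    const int start = outIndex * stride - padding; // window start in unpadded coordinates

    float sum = 0.0f;
    int overlapUnpadded = 0; // window positions inside the unpadded input
    int overlapPadded = 0;   // window positions inside the padded input
    for (int k = 0; k < window; ++k)
    {
        const int pos = start + k;
        if (pos >= -padding && pos < n + padding)
        {
            ++overlapPadded;
        }
        if (pos >= 0 && pos < n)
        {
            sum += input[static_cast<std::size_t>(pos)];
            ++overlapUnpadded;
        }
    }

    const int denominator = countExcludesPadding ? overlapUnpadded : overlapPadded;
    assert(denominator > 0);
    return sum / static_cast<float>(denominator);
}
```

For the input {2, 4, 6} with window 2, stride 1, and padding 1, the first window covers one padded position and the value 2. The exclusive count divides by 1, the inclusive count divides by 2. Windows that lie fully inside the input give the same result in both cases.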

|

pure virtual |

Get whether average pooling uses as a denominator the overlap area between the window and the unpadded input.

|

pure virtual |

Set the multi-dimension pre-padding for pooling.

The start of the input will be padded by this number of elements in each dimension. The padding value depends on the pooling type: -inf is used for max pooling and zero for average pooling.

Default: (0, 0, ..., 0)

If executing this layer on DLA, only 2D padding is supported, and both the height and width of the padding must be in the range [0,7].

|

pure virtual |

Get the pre-padding.

|

pure virtual |

Set the multi-dimension post-padding for pooling.

The end of the input will be padded by this number of elements in each dimension. The padding value depends on the pooling type: -inf is used for max pooling and zero for average pooling.

Default: (0, 0, ..., 0)

If executing this layer on DLA, only 2D padding is supported, and both the height and width of the padding must be in the range [0,7].
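The effect of pre- and post-padding with -inf for max pooling can be sketched in one dimension. This is a standalone illustration, not TensorRT code: `maxPool1D` is a hypothetical helper, and it assumes that windows which do not fit entirely inside the padded input are dropped.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical helper (not part of the TensorRT API): 1D max pooling where the
// start and end of the input are padded with -inf before the window slides.
std::vector<float> maxPool1D(const std::vector<float>& input, int window, int stride,
                             int prePadding, int postPadding)
{
    assert(window >= 1 && stride >= 1 && prePadding >= 0 && postPadding >= 0);
    const float negInf = -std::numeric_limits<float>::infinity();

    // Build the padded input: pre-padding, original values, post-padding.
    std::vector<float> padded(static_cast<std::size_t>(prePadding), negInf);
    padded.insert(padded.end(), input.begin(), input.end());
    padded.insert(padded.end(), static_cast<std::size_t>(postPadding), negInf);

    const std::size_t w = static_cast<std::size_t>(window);
    const std::size_t s = static_cast<std::size_t>(stride);

    std::vector<float> output;
    for (std::size_t start = 0; start + w <= padded.size(); start += s)
    {
        float best = negInf;
        for (std::size_t k = 0; k < w; ++k)
        {
            best = std::max(best, padded[start + k]);
        }
        output.push_back(best);
    }
    return output;
}
```

Because the padding value is -inf, padded positions never win the maximum. For the all-negative input {-5, -3, -4} with window 2, stride 1, and a pre-padding of 1, the first output is -5, whereas zero padding would have produced 0.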

|

pure virtual |

Get the post-padding.

|

pure virtual |

Set the padding mode.

Padding mode takes precedence if both setPaddingMode and setPre/PostPadding are used.

Default: kEXPLICIT_ROUND_DOWN
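As a rough sketch of what rounding down means for the output size, the helper below computes the pooled extent along one dimension. The formula is an assumption based on the usual explicit-padding convention (padded extent minus window, divided by stride, plus one) and is not stated on this page. `pooledExtent` and the `roundUp` flag are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not part of the TensorRT API): output extent along one
// dimension for explicit padding, rounding the division down or up.
// Assumed formula: round((input + pre + post - window) / stride) + 1.
int32_t pooledExtent(int32_t input, int32_t window, int32_t stride,
                     int32_t prePadding, int32_t postPadding, bool roundUp)
{
    assert(window >= 1 && stride >= 1);
    const int32_t padded = input + prePadding + postPadding;
    assert(padded >= window);

    const int32_t span = padded - window;
    const int32_t steps = roundUp ? (span + stride - 1) / stride : span / stride;
    return steps + 1;
}
```

For an input extent of 8, window 3, stride 2, and no padding, rounding down gives 3 outputs and rounding up gives 4. The two modes agree whenever the stride divides the span evenly.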

|

pure virtual |

Get the padding mode.

|

pure virtual |

Set the multi-dimension window size for pooling.

If executing this layer on DLA, only 2D window sizes are supported, and both the height and width of the window size must be in the range [1,8].

|

pure virtual |

Get the multi-dimension window size for pooling.

|

pure virtual |

Set the multi-dimension stride for pooling.

Default: (1, 1, ..., 1)

If executing this layer on DLA, only 2D strides are supported, and both the height and width of the stride must be in the range [1,16].

|

pure virtual |

Get the multi-dimension stride for pooling.

|

pure virtual |

Set the multi-dimension padding for pooling.

The input will be padded by this number of elements in each dimension. Padding is symmetric. The padding value depends on the pooling type: -inf is used for max pooling and zero for average pooling.

Default: (0, 0, ..., 0)

If executing this layer on DLA, only 2D padding is supported, and both the height and width of the padding must be in the range [0,7].
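The DLA limits quoted for window size, stride, and padding can be collected into one pre-flight check. The sketch below is standalone and uses only the ranges stated on this page. `PoolingParams2D` and `fitsDlaPoolingLimits` are hypothetical names, and the check does not cover other DLA restrictions such as the supported pooling types.

```cpp
// Hypothetical parameter bundle (not a TensorRT type) for a 2D pooling layer.
struct PoolingParams2D
{
    int windowH, windowW;
    int strideH, strideW;
    int paddingH, paddingW;
};

constexpr bool inRange(int value, int low, int high)
{
    return value >= low && value <= high;
}

// Returns true when every value lies in the range documented for DLA:
// window in [1,8], stride in [1,16], padding in [0,7].
constexpr bool fitsDlaPoolingLimits(PoolingParams2D p)
{
    return inRange(p.windowH, 1, 8) && inRange(p.windowW, 1, 8)
        && inRange(p.strideH, 1, 16) && inRange(p.strideW, 1, 16)
        && inRange(p.paddingH, 0, 7) && inRange(p.paddingW, 0, 7);
}

// Compile-time usage examples.
static_assert(fitsDlaPoolingLimits({2, 2, 2, 2, 0, 0}), "2x2 window with stride 2 is within the limits");
static_assert(!fitsDlaPoolingLimits({9, 9, 1, 1, 0, 0}), "9x9 window exceeds the [1,8] window range");
```

Running such a check before configuring the layer makes it easier to decide up front whether a given configuration is a candidate for DLA execution.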

|

pure virtual |

Get the multi-dimension padding for pooling.

If the padding is asymmetric, the pre-padding is returned.

|

pure virtual inherited |

Return the type of a layer.

|

pure virtual inherited |

Set the name of a layer.

This method copies the name string.

|

pure virtual inherited |

Return the name of a layer.

|

pure virtual inherited |

Get the number of inputs of a layer.

|

pure virtual inherited |

Get the layer input corresponding to the given index.

| index | The index of the input tensor. |

|

pure virtual inherited |

Get the number of outputs of a layer.

|

pure virtual inherited |

Get the layer output corresponding to the given index.

|

pure virtual inherited |

Replace an input of this layer with a specific tensor.

| index | the index of the input to modify. |

| tensor | the new input tensor |

Except for IShuffleLayer, ISliceLayer, IResizeLayer and ILoopOutputLayer, this method cannot change the number of inputs to a layer. The index argument must be less than the value of getNbInputs().

See the overloaded setInput() comments for each layer's special behavior.

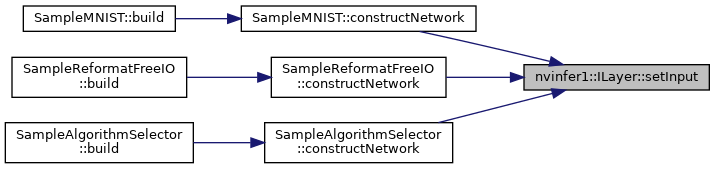

Implemented in nvinfer1::IFillLayer, nvinfer1::ILoopOutputLayer, nvinfer1::IRecurrenceLayer, nvinfer1::IResizeLayer, nvinfer1::ISliceLayer, nvinfer1::IShuffleLayer, nvinfer1::IDeconvolutionLayer, nvinfer1::IFullyConnectedLayer, and nvinfer1::IConvolutionLayer.

|

pure virtual inherited |

Set the computational precision of this layer.

Setting the precision allows TensorRT to choose implementations that run at this computational precision. The layer input type is also inferred from the layer computational precision. TensorRT may still choose a faster, non-conforming implementation and ignore the requested layer precision. Use BuilderFlag::kSTRICT_TYPES to force TensorRT to choose implementations with the requested precision. If no implementation is found with the requested precision, TensorRT chooses the fastest available implementation. If the precision is not set, TensorRT selects the layer computational precision and layer input type based on performance considerations and the flags specified to the builder.

| dataType | the computational precision. |

|

pure virtual inherited |

Get the computational precision of this layer.

|

pure virtual inherited |

Whether the computational precision has been set for this layer.

|

pure virtual inherited |

Reset the computational precision for this layer.

|

pure virtual inherited |

Set the output type of this layer.

Setting the output type constrains TensorRT to choose implementations that generate output data with the given type. If it is not set, TensorRT selects the output type based on the layer computational precision. TensorRT may still choose a non-conforming output type based on the fastest implementation. Use BuilderFlag::kSTRICT_TYPES to force the requested output type. If the layer precision is not specified, the output type depends on the implementation chosen based on performance considerations and the flags specified to the builder.

This method cannot be used to set the data type of the second output tensor of the TopK layer, which is always Int32. Also, the output type of all layers that are shape operations must be DataType::kINT32; attempts to set the output type to some other data type are ignored, apart from issuing an error message.

Note that the layer output type is generally not identical to the data type of the output tensor, as TensorRT may insert implicit reformatting operations to convert the former to the latter. Calling layer->setOutputType(i, type) has no effect on the data type of the i-th output tensor of layer; users need to call layer->getOutput(i)->setType(type) to change the tensor data type. This is particularly relevant if the tensor is marked as a network output, since only setType() [but not setOutputType()] will affect the data representation in the corresponding output binding.

| index | the index of the output to set |

| dataType | the type of the output |

|

pure virtual inherited |

Get the output type of this layer.

| index | the index of the output |

|

pure virtual inherited |

Whether the output type has been set for this layer.

| index | the index of the output |

|

pure virtual inherited |

Reset the output type for this layer.

| index | the index of the output |